Spark computing for machine learning

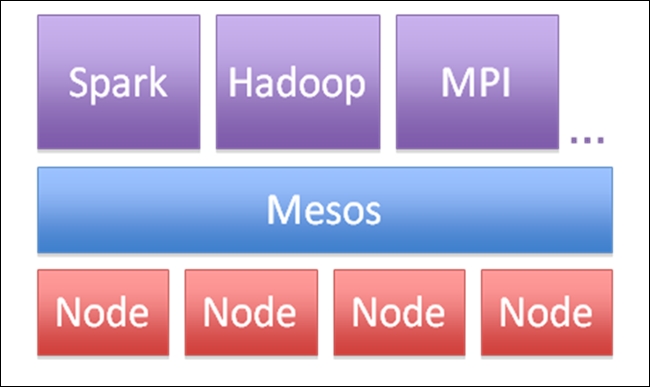

With its innovations in resilient distributed datasets (RDDs) and in-memory processing, Apache Spark has made distributed computing easily accessible to data scientists and machine learning professionals. According to the Apache Spark team, Apache Spark can run on the Mesos cluster manager, which lets it share cluster resources with Hadoop and other applications. Apache Spark can also read from any Hadoop input source, such as HDFS.
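To make the RDD and in-memory ideas concrete, here is a minimal PySpark sketch, not taken from the book. It assumes a text file of comma-separated numeric features; the HDFS URI, the application name, and the helper names `parse_features`, `mean_of_first_feature`, and `run` are all hypothetical and chosen only for illustration.

```python
def parse_features(line):
    """Turn a comma-separated line such as '1.0,2.5,3.0' into a list of floats."""
    return [float(value) for value in line.split(",")]


def mean_of_first_feature(sc, path):
    """Compute the mean of the first column, reusing a cached RDD for both passes."""
    # textFile accepts local paths as well as Hadoop URIs such as hdfs://...
    rows = sc.textFile(path).map(parse_features)
    # cache() asks Spark to keep the parsed rows in memory once they are computed,
    # so the second action below does not have to re-read and re-parse the file.
    rows.cache()
    count = rows.count()                       # first action: reads, parses, fills the cache
    total = rows.map(lambda r: r[0]).sum()     # second action: works from the cached rows
    return total / count


def run(path="hdfs://namenode:8020/data/features.csv"):  # hypothetical URI
    """Create a SparkContext, run the computation, and always stop the context."""
    from pyspark import SparkContext  # imported here so the helpers load without Spark

    sc = SparkContext(appName="rdd-cache-sketch")  # hypothetical application name
    try:
        return mean_of_first_feature(sc, path)
    finally:
        sc.stop()
```

Calling `run()` from a script launched with `spark-submit` would execute the job on whichever cluster manager the submission targets. Iterative machine learning algorithms make many passes over the same data, which is why keeping the parsed RDD in memory tends to matter more for them than for a single-pass job.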

For these reasons, the Apache Spark computing model is well suited to distributed computing for machine learning. Especially for rapid interactive machine learning, parallel computing, and complicated modelling at scale, Apache ...
