In this recipe, we will explore the fundamental concept behind mathematical optimization using simple derivatives before introducing Gradient Descent (a first-order derivative method) and L-BFGS, a Hessian-free quasi-Newton method that approximates curvature from recent gradients instead of computing the Hessian explicitly.

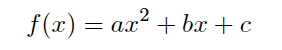

We will examine a sample quadratic cost/error function and show how to find its minimum or maximum analytically, using nothing more than algebra and basic calculus.
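To make the idea concrete, here is a minimal sketch in Python. The quadratic and its coefficients `a`, `b`, and `c` are hypothetical values chosen only for illustration; they are not taken from the recipe. For f(x) = ax^2 + bx + c, the extremum sits at the vertex x = -b / (2a), which is also the point where the slope f'(x) = 2ax + b equals zero. The sign of the second derivative, 2a, tells us whether that point is a minimum or a maximum.

```python
# Hypothetical quadratic cost function f(x) = a*x^2 + b*x + c.
# The coefficients below are illustrative assumptions, not values from the recipe.
a, b, c = 2.0, -8.0, 3.0


def f(x):
    """Evaluate the quadratic cost function at x."""
    return a * x**2 + b * x + c


def f_prime(x):
    """First derivative (slope) of the quadratic: f'(x) = 2*a*x + b."""
    return 2 * a * x + b


# Closed form (vertex formula): the extremum of a quadratic is at x = -b / (2a).
x_vertex = -b / (2 * a)

# Derivative method: setting f'(x) = 2*a*x + b = 0 gives the same x,
# so the slope evaluated at the vertex should be zero.
slope_at_vertex = f_prime(x_vertex)

# The second derivative of a quadratic is the constant 2*a.
# Positive means the curve opens upward (minimum), negative means maximum.
kind = "minimum" if 2 * a > 0 else "maximum"

print(f"{kind} at x = {x_vertex}, f(x) = {f(x_vertex)}, slope = {slope_at_vertex}")
# prints: minimum at x = 2.0, f(x) = -5.0, slope = 0.0
```

Because the function is a simple quadratic, both routes give the same answer in one step. Functions with no such closed form are where iterative numerical methods become necessary.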

We will use both the closed form (vertex formula) and the derivative method (slope) to find the minimum, but we will defer to later recipes in this chapter to introduce numerical optimization techniques, such as Gradient ...