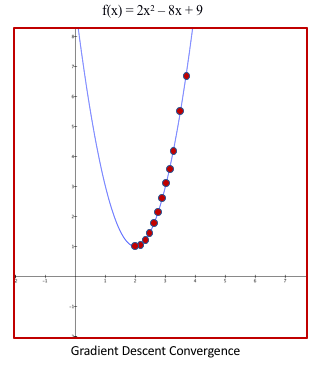

The Gradient Descent technique takes advantage of the fact that the gradient of the function (first derivative in this case) points to the direction of the descent. Conceptually, Gradient Descent (GD) optimizes a cost or error function to search for the best parameter for the model. The following figure demonstrates the iterative nature of the gradient descent:
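In symbols, one GD step for a single-variable function $f$ can be sketched as follows, writing $\eta$ for the learning rate and $x_k$ for the estimate after $k$ iterations (this notation is assumed here, not taken from the recipe):

$$
x_{k+1} = x_k - \eta\, f'(x_k)
$$

One common stopping rule halts once the size of the update, $|x_{k+1} - x_k| = \eta\,|f'(x_k)|$, falls below a chosen tolerance.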

We started the recipe by defining the step size (the learning rate), the tolerance, the function to be minimized, and its first derivative. We then iterated from the initial guess (13 in this case), moving closer to the target minimum at zero with each step. ...
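A minimal Python sketch of the loop described above follows. The recipe's actual function is not shown in this excerpt, so the choice of f(x) = x², the learning rate of 0.1, the tolerance of 1e-6, the iteration cap, and the names `f`, `df`, and `gradient_descent` are illustrative assumptions. Only the initial guess of 13 and the minimum at zero come from the text.

```python
# Minimal gradient descent sketch.
# Assumptions (not from the original recipe): f(x) = x**2,
# learning rate 0.1, tolerance 1e-6, and a cap of 10_000 iterations.


def f(x):
    """Function to minimize (assumed): f(x) = x^2, with its minimum at x = 0."""
    return x ** 2


def df(x):
    """First derivative of the assumed function: f'(x) = 2x."""
    return 2 * x


def gradient_descent(df, x0, learning_rate=0.1, tolerance=1e-6, max_iter=10_000):
    """Step against the derivative until the update is smaller than tolerance.

    Returns the final estimate and the number of iterations performed.
    """
    x = x0
    for i in range(1, max_iter + 1):
        step = learning_rate * df(x)
        x -= step  # move opposite the gradient, i.e. downhill
        if abs(step) < tolerance:
            return x, i
    return x, max_iter


x_min, n_iter = gradient_descent(df, x0=13.0)
print(f"minimum near x = {x_min:.6f} (f = {f(x_min):.2e}) after {n_iter} iterations")
```

With these assumed values each update multiplies x by 0.8, so the estimate shrinks geometrically toward 0. For this particular function, a learning rate above 1.0 would make the iterates diverge instead.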