This chapter shows how you can incorporate usability design and evaluation into the life cycle of privacy and security solutions. Here we provide you with an overview of critical-path human-computer interaction (HCI) activities that occur during the development of successful solutions and their maintenance after release. We will point you to key publications and references that you can use to gain a more in-depth understanding of HCI methods and practices in evaluating the usability of your security and privacy systems. And we will walk you through the use of these methods in a case study of a security application as well as a case study of two years of research on privacy tools for authoring and implementing privacy policies.

Of course, this chapter alone cannot make you proficient in using HCI methods. But it will guide you in understanding the value that HCI work can contribute to the success of your product.

We recommend that most projects bring an HCI expert on board early in the project’s development, and that the HCI expert be a full team member. Working together, the project team should make the usability activities part of the critical development path. We have seen many successful examples where the HCI lead’s expertise was leveraged to train and educate the product team so that team members completed parts of the HCI project plan with consultation and collaboration from the HCI expert. We have also seen several examples of skilled computer scientists and programmers who gradually became HCI specialists, frequently with great success. One of this chapter’s co-authors, Carolyn, has, in fact, followed such a career path!

Properly applied, HCI methods and techniques elicit and identify the useful information in each phase of the project life cycle. These activities can facilitate a more accurate and complete project definition during the requirements phase and an improved project design during the design phase. During the development phase, they can improve solution performance and reduce development time and costs. The benefits of HCI and usability activities continue after release, including increased user productivity and satisfaction, reduced training, support, and error recovery costs, increased sales and revenue, and reduced maintenance costs.

Although many HCI techniques are general, there are unique aspects in the design of privacy and security systems that present challenges and opportunities.

First, a key issue to consider is that security and privacy are rarely the user’s main goal. Users value and want security and privacy, of course, but they regard them as secondary to their primary tasks, such as completing an online banking transaction or ordering medications. Users would like privacy and security systems and controls to be as transparent as possible. On the other hand, users want to be in control of the situation and understand what is happening. Therein lies the rub. As a consequence, the display of information and the interaction methods related to security and privacy need to be accessible if and when the user wants them, but they shouldn’t get in the way.

Second, as more of people’s interactions in daily life involve the use of computing technology and sensitive information, disparate types of users must be accommodated. Security solutions in particular have historically been designed with a highly trained technical user in mind. The user community has broadened extensively as organizational business processes have come to include new types of roles and users in the security and privacy area. Many compliance and policy roles in organizations are handled by legal and business process experts who have limited technical skills. Moreover, the end user base includes virtually everyone in the population. The functionality provided by the system for people with different roles must accommodate the skill sets of each. Security and privacy are requirements for doing business as an organization and must be done well, or the organization may lose the user as a customer—or worse.

Third, the negative impact that usability problems can have is higher for security and privacy applications than for many other types of systems. Complexity is at the very heart of many security and privacy solutions; from an HCI point of view, that complexity is the enemy of success. If a system is so complex that whole groups of users (e.g., technical users, business users, and end users) cannot understand it, costly errors will occur. There is a saying in the HCI field: “If the user cannot understand functionality, it doesn’t exist.” In the case of security and privacy, sophisticated systems that are badly designed may actually put users at more risk than if less sophisticated solutions were used. So, the increased risk of errors in this domain provides an even greater incentive to include HCI work in system research and development.

Fourth, users will need to be able to update security and privacy solutions easily to accommodate frequent changes in legislation and regulations. Different domains (e.g., health care, banking, government) frequently have unique requirements. Systems must therefore be designed so that they can be updated easily and effectively.

These are some of the challenges that provide a unique focus and strong incentive to include HCI in the system life cycle. You can probably think of more. Let’s now discuss in more detail the valuable role that HCI can play.

It is critical that the project team be able to define the product and product goals clearly and early on. This early definition work takes place in the requirements phase.

From a usability perspective, the product definition should address who the intended users of the product are, the types of tasks for which users will use the product, and the real-world situations (the “context”) in which the product will be used.

A variety of formal HCI methods are available to identify and define this information. For any specific product, the HCI expert selects the appropriate subset of methods to use, given the time, resources, risk, and business priorities involved. Some of these methods include:

- Interviews and surveys of end-user requirements

Used to understand what top concerns and unmet needs customers, target users, and organizational stakeholders would like addressed. When you begin to work in a new domain area, it is critical that you start with end-user interviews and surveys in order to gain firsthand knowledge of the major issues and opportunities from users in their own voices. Typically these surveys involve a fairly large sample—perhaps 30 to 100 participants. Ideally, you begin with a larger survey group and follow up with in-depth interviews of a smaller group of end users and other key stakeholders (e.g., decision makers who will not necessarily use the system). Identification and refinement of the end-user profile occur as part of this work.

- Focus groups

Used to identify, at a high level, the areas in which customers, target users, and other stakeholders have concerns and unmet needs. Focus groups can augment surveys. An independent facilitator guides group discussions with samples of end users and probes issues in the domain area in greater depth than surveys are generally able to do. If resources are available, you might use focus groups before you conduct individual interviews.

- Field studies of end-user work context

Used to understand and observe how current technology and business practices are being employed by users to accomplish their goals today. Find out what’s working and what’s not. Depending on your resources, expertise, and time available, you might conduct limited field studies to complement and validate the information gained from the preceding methods, or you might use a large sample of field studies to gain all of your information and forgo the preceding steps.

- Task analysis

Used to understand the core and infrequent tasks that users need or would like to complete to accomplish their goals. These tasks will be identified during interviews, focus groups, and field studies of end-user work context. The experts gathering the information will probe to learn the context and relative importance of each task. Also, end users will provide information to help set the usability objectives of the system (see the description of deliverables later in this section).

- Benchmark studies

Used to understand current or baseline user performance using available manual or automated processes to complete the types of core tasks that the product will address. Benchmark data can be collected during field studies of end users or in controlled laboratory studies. This is critical data that will help you to set the goals for the usability of the new system and enable you to measure the value of the improvement that the new system will deliver. Be sure to collect this data for business cases and other purposes.

- Competitive evaluations

Used to understand the capabilities available in the marketplace at the current time and identify the strengths and weaknesses of these solutions. As with the benchmark data, this data will help you to quantify the usability objectives and the value of the solution you are going to design; you will also design a better system after you understand the strengths and weaknesses of competitive systems.

As you can see, there are many kinds of methods that you can use to collect the end-user requirements information. As a result, you must make tradeoffs in how you collect and validate the information based on the time, resources, and skilled personnel that you have available to work on a project. In a perfect world, it would probably be best to do field studies of representative samples of the different types of end users (e.g., based on age, skills, experience, roles) in a representative sample of the locations (e.g., geographic, physical conditions) and contexts (e.g., quiet indoor setting versus a mobile, public, and noisy setting) in which the system would be appropriate for use. Practically speaking, though, there are always constraints. One approach is to triangulate or cross-validate the data: ask the same questions using a variety of methods and determine whether the end users are providing you similar or different data. Thus, starting with an email survey, followed by a sample of in-depth interviews and a sample of field observation of end users, you could validate the emerging end-user requirements while keeping within time and resource constraints.

Based on the analysis of the data from the usability engineering activities during the requirements phase described earlier, the project team will have the following deliverables:

- End-user requirements definition

The complete description of what the product must provide so that users can accomplish their tasks and goals in a specific domain.

- User profile definition

A description of the target users of the product, including general demographic information and specific information about skills, education, and training necessary to use the product.

- Core task scenarios

A small set of task scenarios that describe how the end users will be able to accomplish core tasks with the product to meet their goals.

- Usability objectives specification

A quantitative goal or set of goals that must be met for the user to be satisfied and productive using the product.[1] An example is “95% of the users will complete the sign-on task error-free within the first three attempts at the task.” The objective should surpass the results of the benchmark testing and competitive evaluations. It should also address a variety of goals: user goals for productivity and satisfaction, business goals for the streamlining of business processes, and organizational goals related to expense and risk (the cost of errors and user support). The usability objectives specification gives the project team a means to communicate clearly about, and reach consensus on, the usability goals of the product.
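Because a usability objective is stated quantitatively, checking test results against it is simple arithmetic. The sketch below checks the example sign-on objective against per-participant attempt records. It is a minimal illustration under stated assumptions, not a prescribed tool: `UsabilityObjective`, `met_criterion`, and `evaluate` are hypothetical names; each participant's attempts are recorded in order as `True` (error-free) or `False`; and "error-free within the first three attempts" is read as at least one error-free completion among the first three attempts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsabilityObjective:
    """A quantitative usability objective (hypothetical structure for illustration)."""
    task: str
    target_share: float  # fraction of users who must meet the criterion, e.g. 0.95
    max_attempts: int    # the criterion must be met within this many attempts


def met_criterion(attempts: list[bool], max_attempts: int) -> bool:
    """Assumed reading: any of the first `max_attempts` attempts was error-free."""
    return any(attempts[:max_attempts])


def evaluate(objective: UsabilityObjective,
             results: dict[str, list[bool]]) -> tuple[float, bool]:
    """Return the share of participants meeting the criterion and whether the objective is met."""
    if not results:
        raise ValueError("no participant results")
    passed = sum(met_criterion(a, objective.max_attempts) for a in results.values())
    share = passed / len(results)
    return share, share >= objective.target_share


# The example objective from the text.
sign_on = UsabilityObjective(task="sign-on", target_share=0.95, max_attempts=3)

# Hypothetical results: participant ID -> outcome of each attempt, in order.
results = {
    "P1": [False, True],
    "P2": [True],
    "P3": [False, False, False, True],  # error-free only on the fourth attempt
}
share, ok = evaluate(sign_on, results)  # share is 2/3, so the objective is not met
print(f"{share:.0%} met the criterion; objective met: {ok}")
```

Keeping the objective as data rather than prose lets the team rerun the same check after every round of testing.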

These deliverables contribute to a clear and thoughtful statement about the scope of the development project, and clear objectives and measures with which to judge the success of designing and developing a usable product for the target users. HCI work in the requirements phase frequently improves communication within the development team and brings about consensus with respect to product definition in clear and quantitatively measurable terms. On numerous occasions, early HCI work on end-user requirements has surfaced large or complex issues and conflicting goals among the development team that could be resolved relatively quickly and at a cost that is much lower during the concept phase than it would be during system test or after release.[2]

Of course, conflicting goals may also exist in the customer organization. Discussions during the requirements phase can provide the opportunity to highlight and resolve these differences.

During the design and development phases, the project team needs to create a clear, high-level design for the product that will be implemented during development. From an HCI perspective, the key activities during these phases are:

- Initial design development

Based on the results of the HCI work during the concept phase, the HCI professional can create an initial user interface design that covers the interaction methods and flow that users will employ to complete the core task scenarios. This design will guide the development of prototypes, provide feedback to the development team, and give the project architect needed information for the system architecture.

- Ongoing data from users

Although there is a tendency to view gathering user data as an isolated activity, design and development require information from users on an ongoing basis. HCI professionals can and should be responsible for keeping target users involved with the development team throughout the life cycle. Users can participate in design walkthroughs and field and laboratory evaluations of prototypes. These sessions are a valuable way to understand user needs and develop a system that addresses them.

- Prototype development (low, medium, and high fidelity)

The HCI professional can create a prototype of the user interface that illustrates how the end user would complete core task scenarios. Low-fidelity prototypes created early in the design and development phases might consist of static screenshots with a scenario script that walks target users through the completion of tasks. These early prototypes let the project team collect information from target users about macro-level design issues. As the design is refined, the project team can create medium- or high-fidelity prototypes that give target users hands-on experience and let them provide finer-grained feedback on design issues and tradeoffs. In a medium-fidelity prototype, selected paths through the overall design (corresponding to particular tasks) and the interaction methods (e.g., data input or access through voice, touch, keyboard and mouse, or biometrics) generally work realistically, although the frontend may not be integrated in real time with the backend of the system. A high-fidelity prototype is a fully functional prototype of the system and may be the alpha-level version of the actual system code.

- Design walkthroughs with small groups of users

The HCI professional can facilitate design walkthrough sessions with small groups of target users. These walkthroughs are generally conducted early in the design and development phase with low-fidelity prototypes or static screenshots. It may be very valuable to iterate on the initial design and conduct a second round of design walkthrough sessions with new samples of target users to validate the design decisions made after the first round. The target users will be able to provide data on specific aspects and capabilities of the solution, as well as the usability of the system and their satisfaction with it. Target users will also provide information essential to updating the task scenarios and user profiles.

- Heuristic evaluations and other types of inspections

The project team may recruit HCI professionals who have expertise in the specific domain in order to have them adopt the role or roles of the target users of the system, to conduct heuristic reviews of the prototypes, and to identify issues for the project team to address. Think of these professionals as “double experts.” The heuristic review consists of the experts completing the core user scenarios in the roles of the various target users and identifying usability problems that people in those roles will face with the current design of the system. The project team may also find it valuable to have these experts review competitive offerings so that the team has additional input on how their product is likely to compare to the current competition.

- Usability tests (laboratory or field) with individual users

During development, HCI professionals can run usability evaluation sessions with one target user at a time, employing medium- to high-fidelity prototypes of the solution that enable the users to have hands-on experience in using the prototyped system to complete their core task scenarios. These sessions may be conducted in a controlled usability laboratory where there is often a one-way mirror, letting members of the development team and other stakeholders observe the user interacting with the prototype firsthand. Alternatively, sessions may be conducted in the field. Although the field may have poor lighting, noise, interruptions, and a lack of privacy, it does represent the environment where the product will be deployed: testing the product in the field helps the development team to identify issues that are likely to be important later. Consider the tradeoffs between laboratory and field usability tests. If possible, it is best to use both types of tests in this phase of the project.

During these user sessions, the team should collect a variety of data, including qualitative information (e.g., how hard the system is to learn, users’ experience in using it, and their satisfaction with it) and quantitative performance data (e.g., time on task, completion rate, error-free rate). This data will enable the project team to determine where they stand in terms of meeting the product’s usability objectives. It is best to iterate on the design and collect usability evaluation data until the usability objectives are reached. In practice, tradeoffs are made to meet business needs, and the data will provide a useful basis on which to make these decisions.

Performance data can also be used to determine the severity rating of the different identified issues through use of a Problem Severity Classification Matrix,[3] which we describe in more detail in the first case study later in this chapter. The team can also learn whether target users would like to use the system as designed, whether they would purchase it, and how they would incorporate it into their lives. This more detailed, in-context design information from target users is very valuable and enables the project team to complete the micro-level design of the solution. From a project planning standpoint, consider that the usability tests in the design and development phases can be scheduled to dovetail with the appropriate unit, integration, and system testing.

- Prototype redesign

After a round of usability evaluation, analyze the data and present it to the project team. The whole project team can contribute to the decision-making process about which issues to tackle and how to redesign parts of the system to address them. A discussion of the problem priorities and of the different approaches to resolve those problems can be quite productive when drawing on the different skills and experience of the team members.
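The quantitative performance measures collected in usability tests (time on task, completion rate, error-free rate) can be tallied from simple per-session records before the data is presented to the team. The following is a minimal sketch, not a prescribed tool: the `Session` record and `summarize` function are hypothetical; an "error-free" session is assumed to be a completed one with zero recorded errors; and mean time on task is computed over completed sessions only, which is one common convention among several.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Session:
    """One participant's attempt at one core task (hypothetical record format)."""
    participant: str
    task: str
    completed: bool
    errors: int
    seconds: float  # time on task


def summarize(sessions: list[Session], task: str) -> dict[str, float]:
    """Completion rate, error-free rate, and mean time on task for one task."""
    rows = [s for s in sessions if s.task == task]
    if not rows:
        raise ValueError(f"no sessions for task {task!r}")
    done = [s for s in rows if s.completed]
    return {
        "n": len(rows),
        "completion_rate": len(done) / len(rows),
        # Assumption: error-free means completed with zero recorded errors.
        "error_free_rate": sum(1 for s in done if s.errors == 0) / len(rows),
        # Assumption: mean time is taken over completed sessions only.
        "mean_time_on_task": mean(s.seconds for s in done) if done else float("nan"),
    }


# Hypothetical data from one round of testing.
sessions = [
    Session("P1", "sign-on", True, 0, 6.0),
    Session("P2", "sign-on", True, 1, 10.0),
    Session("P3", "sign-on", False, 2, 30.0),
    Session("P4", "sign-on", True, 0, 8.0),
    Session("P1", "inquiry", True, 0, 20.0),
]
print(summarize(sessions, "sign-on"))
```

Computing the same summary after each design iteration shows whether the team is converging on its usability objectives.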

In the design and development phases, the main goal is to move from a fairly high-level design based on the early HCI activities in the requirements phase to a user-validated design of a highly usable system. The validated design has sufficient detail for development of the system. Usability problems in the early design are detected and resolved, and the final version of the system should meet all defined usability objectives prior to release.

Based on the analysis of the data from the usability engineering activities described earlier during the design and development phases, the project team will have the following deliverables:

- Validated and usable user interface design

The project team can be confident that the user interface design will be successful in deployment and release based on the iterative design and evaluation with target users during the design and development phases. The validation of the design with the target users demonstrates that all significant user requirements are addressed by the system. The validation will be based on meeting the usability objectives set for the system. These will be assessed based on user performance with the system and satisfaction ratings of the system by target users during the usability evaluation of the latest iteration of the system design.

- Validated user model of the task flow

Underlying the interface design is a model of how the target users perceive the task and their views of the flow of the task to reach the desired goal. In addition, user errors made during usability evaluation sessions are invaluable to HCI practitioners because they highlight mismatches and points of confusion between the design and the user model. This helps the entire project team understand and gain consensus about the design.

- Validated and updated core user task scenarios

The user task scenarios will be refined and validated during the iterative usability evaluations in the design and development phases of the project. The project team can be very comfortable that they are addressing the critical tasks of their target users.

- Validated and updated system requirements document

As part of the usability activities in the design and development phases, information emerges that will be used to update and validate the system requirements. This occurs through the technical discussions about the project goals, the analysis of tasks to be completed using the system, and the results of the usability evaluations.

- Validated and updated user profiles

The usability work with target users in the design and development phases provides an up-to-date and clear definition of the target users. The project team can be comfortable that they are developing the product for the correctly defined, intended users. This information is very valuable for communication and marketing purposes.

- Objective performance data about achievement of usability objectives

The project team will know exactly where they stand at the end of the development phase in terms of reaching the product usability objectives. As mentioned previously, in practice, tradeoffs are made in reaching these goals, and the usability data provides a sound basis for these decisions.

- User interface style guide

If the organization is going to be developing a series of products in a particular market niche for a similar set of target users, it may be valuable to capture the high-level design decisions made for the user interface for use by other teams. In this way, the initial work with users during the concept phase can be leveraged.

During prerelease beta-testing and after release of the final version of the product, the product team needs to be informed of feedback from the customers who are using the product. This feedback will help the team maintain the product and can be very helpful in understanding when a new release is required. From an HCI perspective, the key activities in this phase are:

- Collection and analysis of customer data using online tools

Tools of this kind enable remote data collection and usability evaluation. With customer consent, online tools may collect usage data through unobtrusive means and may also pop up questionnaires to collect explicit feedback from users at certain points in the use of a product. Data from these online tools can be analyzed and may highlight important issues that HCI practitioners need to follow up on with customers in individual and small group HCI work in laboratory and field settings.

- Analysis of customer support calls regarding the product

Customer support calls provide another channel for customer feedback to the product team. The HCI practitioner can work with the product team to prioritize the issues and requests. As time goes by after the release of the product, these calls may provide information that signals when a new release of the product is necessary.

- Analysis of user comments about the product in email, mailing lists, or web forums

These are further channels for customer feedback about problems and for the identification of new or extended capabilities that are needed to address the customers’ needs.

- Collection and analysis of survey and questionnaire data from users or through user group meetings

The activities described earlier may be supplemented by telephone or written surveys of customers about their satisfaction with the product and any issues or requests for new functionality that they would like to communicate to the product team. Having regularly scheduled user group meetings that customers can attend is another way to collect information about problems with the current product or new customer requirements regarding usability or functionality that evolve in the changing world in which we live.
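The online tools described above collect usage data only with customer consent and may pop up questionnaires at certain points in the use of a product. As an illustration only, the sketch below shows a hypothetical `UsageLogger` that stores nothing until the customer opts in, and queues a questionnaire when the user reaches designated events. The class, its fields, and the event names are invented for this example; storing and transmitting the collected data are out of scope.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class UsageLogger:
    """Hypothetical consent-gated usage logger with questionnaire triggers."""
    consented: bool = False
    # Events after which the user should be offered a short questionnaire.
    survey_triggers: frozenset[str] = frozenset()
    events: list[tuple[str, str]] = field(default_factory=list)
    pending_surveys: list[str] = field(default_factory=list)

    def record(self, event: str) -> None:
        """Log an event with a UTC timestamp, but only if the user opted in."""
        if not self.consented:
            return  # no consent: nothing is stored
        self.events.append((datetime.now(timezone.utc).isoformat(), event))
        if event in self.survey_triggers:
            self.pending_surveys.append(event)


logger = UsageLogger(survey_triggers=frozenset({"policy_saved"}))
logger.record("sign_on")       # ignored: consent not yet given
logger.consented = True        # customer opts in
logger.record("sign_on")
logger.record("policy_saved")  # also queues a questionnaire
print(len(logger.events), logger.pending_surveys)  # prints: 2 ['policy_saved']
```

Checking consent inside `record` itself, rather than at each call site, keeps the opt-in rule in one place.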

Based on the customer data from these HCI activities in the prerelease and postrelease (maintenance) phases, the product team will have up-to-date and accurate information about customer satisfaction and any problems or unmet needs regarding the usability and functionality of the product. The methods described in this section may be used in parallel to collect user data on issues with the system and new emerging requirements. As mentioned previously, it is best to cross-validate user issues and new requirements by communicating with users through multiple channels. If possible, the product team should have access to periodic user group meetings (virtual or face-to-face) supplemented with user data from one or two other channels.

With the overview of usability in the software and hardware life cycle as context, we turn next to an examination of two case studies where HCI work was and is part of the life cycle (references to activities described earlier are shown in italics). Here we identify the variety of positive impacts that the work has provided. The first case study concerns HCI work on a security application, and the second case study describes HCI activities in a two-year privacy research project. The security case study describes a project where the HCI work on the system began when the project was well underway in the design and development phase. Although that security case study occurred some time ago, the fundamental lessons and flow of the significant HCI work on the project still remain true today. In the privacy technology case study, an example is provided of system development where HCI is incorporated as a critical component of the project beginning in the requirements phase.

Clare-Marie Karat was the HCI lead on the development of a security application in 1989. She provides a firsthand account of the process she used to understand the user requirements and improve the usability of this product.[4]

The project’s goal was to improve the dialog of a large data entry and inquiry mainframe application used by 23,000 end users (IBM employees in branch offices across the U.S.). This large mainframe application was composed of many subapplications. The goal was to eliminate the recurring need for security authorization while performing discrete but related tasks that composed a business process. However, the project had entered the design and development phase: coding had already begun. So, before I actually joined the team, the project manager and I agreed upon the approach that I would use. I would quickly complete the HCI activities that should have been done in the requirements phase, then move ahead with the design and development phase HCI work.

Prior to the development of this security application, working with the mainframe application was like having a series of disconnected conversations for the end user. Every time a user wanted to perform a transaction, he had to re-identify, provide a password, select the appropriate application, and type a command known as a mnemonic that indicated the type of transaction to be performed. Further, the use of the actual mnemonics was controlled: users were only told about the mnemonics relevant to their individual job responsibilities and were instructed not to write down the mnemonics or share them with anyone.

I met with the project team to understand the goals of the project and define the overall usability goal. They were ambitious. The old security application was to be taken down on a Friday, the new application was to be installed over the weekend, and employees needed to be able to walk up and use the new security application the next Monday. The transition had to be smooth, without disrupting the end users or business. End users signed on to the target mainframe application about a dozen times a day.

After interviewing key team members, I drafted the measurable usability objectives for the security application and discussed them with the team during a regular status meeting. Consensus was reached that the usability objective for the security application was that “95% of the users will complete the sign-on task error-free within the first three attempts at the task.” No specific usability objective was set for the time to complete the sign-on task itself; however, the team members thought users ought to be able to complete it within about 8 seconds.

I went into the field (a field study of end-user work context) to several branch offices and observed the general workplace setting and the way the employees worked with the current system. I met with and talked to a number of the target users, collected necessary information, and was able to create the user profile definition. The users had an average of two years of work experience in their current jobs, and five years overall with IBM. They all had college degrees, good computer skills, and reasonable experience with the major business applications they used.

I observed their work environment and the transactions they needed to complete on the mainframe application. These users accessed the application to complete a variety of tasks and transactions related to customer relationship management, sales, and fulfillment. Employees in the branch offices were working at close quarters in open office settings with no partitions. Phones rang constantly; people carried on conversations. When sales were completed, big bells were rung to celebrate. It was a busy, exciting, fast-paced, and sometimes rather loud environment in which to work. The end users were typically attending to their terminal screens while juggling a variety of other tasks simultaneously, including communicating with other staff and customers through telephone calls and talking to people stopping by and requesting information. It was clear that the end-user requirements for the security application’s user interface and interaction methods needed to take these social and physical environmental factors into account.

Interviews and observation of the employees revealed that the number of mnemonics they needed to remember (for some employees, this number approached 60, with an average in the high 30s) exceeded what human memory can reliably handle. As might be expected, the users had devised their own methods of keeping track of the mnemonics they needed for their tasks. I conducted a task analysis to identify the key security sign-on tasks the users completed related to the various transactions they worked on each day, and then defined the set of core user scenarios that the security application would have to handle. These scenarios also identified a number of end-user requirements.

Over the course of the next three months, I completed three iterations of design and usability testing of the security application’s proposed user interface. Iterative testing provides an opportunity to test the impact of design changes made to the interface.[5] The initial test was a field test designed to collect data in the context of the work environment and included 5 participants. The second test was a laboratory prototype test involving 10 participants, and the third test was a laboratory integration test of the live code on a test system with 12 participants. The same set of tasks was used in all three tests. (In the examples that follow, the system and applications have been de-identified and the generic mnemonic “CMINQ” is used.)

For the first test, I built a low-fidelity prototype of the initial user interface design that the project team had developed before I joined the effort. The team was very confident in this design and expected users to be able to complete tasks with it in a few seconds. The low-fidelity prototype was constructed using screenshots that I printed and covered with reusable clear laminate so that the users could write with washable pens what they would normally type into the entry fields. The reusable “paper” prototype provided a realistic approximation of the interface screens and navigation and was developed in 20% of the time that an online prototype would have required. The participants in the usability test completed a set of four sign-on tasks that covered different aspects of the changes to the sign-on process. For example, a sign-on task was as follows: “Please sign on to the XXX system to perform an authorized inquiry function (e.g., CMINQ) on the XXX application.”

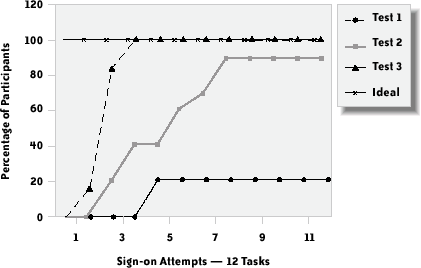

Because the usability objective concerned the users’ ability to quickly learn to use the new system, all three usability tests were designed to measure learning across repetition of the same task. Therefore, the participants completed the set of four typical sign-on tasks three times (called three trials). The sets of tasks were presented in random order in each trial. The same quantitative performance and qualitative opinion measures were collected in all three usability tests. The quantitative data included participant time on task, task completion rate (regardless of the number of errors), and cumulative error-free rate across participants. The qualitative data was collected through use of a debriefing questionnaire that captured user comments on needed changes, what was confusing, and a forced-choice End User Sign-Off rating (a measure I created) about whether the product they had worked with was good enough to install without any changes.
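The test protocol and the three quantitative measures can be sketched in a few lines. This is an illustration under stated assumptions, not the instrument actually used: the task IDs in `TASKS`, the `Attempt` record (one record per task attempt, in the order performed), and all function names are hypothetical. "Cumulative error-free rate" is taken here to mean the share of participants who have completed at least one attempt error-free by attempt *k*.

```python
import random
import statistics
from dataclasses import dataclass

# Hypothetical identifiers for the four sign-on tasks.
TASKS = ("task_1", "task_2", "task_3", "task_4")


def session_plan(n_trials=3, seed=None):
    """Return one independently shuffled task order per trial."""
    rng = random.Random(seed)
    plan = []
    for _ in range(n_trials):
        order = list(TASKS)
        rng.shuffle(order)
        plan.append(order)
    return plan


@dataclass
class Attempt:
    trial: int        # 1-based trial number
    seconds: float    # stopwatch time on task
    completed: bool   # task finished, regardless of errors
    errors: int       # number of errors observed


def median_time_by_trial(sessions):
    """sessions maps participant ID -> attempts in the order performed."""
    by_trial = {}
    for attempts in sessions.values():
        for a in attempts:
            by_trial.setdefault(a.trial, []).append(a.seconds)
    return {t: statistics.median(v) for t, v in sorted(by_trial.items())}


def completion_rate(sessions):
    """Share of all attempts that were completed, regardless of errors."""
    attempts = [a for s in sessions.values() for a in s]
    return sum(a.completed for a in attempts) / len(attempts)


def cumulative_error_free_rate(sessions):
    """Entry k-1 is the share of participants with at least one
    error-free completion within their first k attempts."""
    longest = max(len(s) for s in sessions.values())
    rates = []
    for k in range(1, longest + 1):
        ok = sum(
            any(a.completed and a.errors == 0 for a in s[:k])
            for s in sessions.values()
        )
        rates.append(ok / len(sessions))
    return rates
```

Reporting the median rather than the mean keeps one very slow first attempt from dominating a small sample, which matters with only 5 to 12 participants per test.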

As a way of educating the project team, I invited the lead programmer to work with me in conducting the field test (Test 1). We worked with one participant at a time. I introduced the participant to the purpose of the usability session, and then each participant attempted to perform the three trials of four sign-on tasks, completing a total of 12 tasks (see Figure 4-1). At the end of the third trial, the participant completed a debriefing conversation with me. During the sessions, the lead programmer sat to the side of the participants and unobtrusively completed the stopwatch timing of the participants’ performances on the tasks while I ran the session and acted as the “computer” by replacing the screen on which the participants entered their text with the one they would see next on their computer screen based on their previous input. We were able to quickly move through the branch office and collect the session data, completing the field usability test in about 25% of the time a laboratory test would have required because of the time necessary to recruit and schedule participants to come to the usability lab.

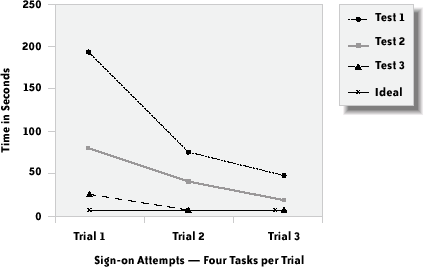

I presented the results to the development team as well as the managers of associated technical and operational areas who were invited to attend the meeting. The results were shocking to those present. Only 20% of the participants (one person) could complete the sign-on task error-free. The average time on task was over 3 minutes in Trial 1, improving with learning to 48 seconds in Trial 3 (see Figures 4-2 and 4-3). Not one of the participants thought the product was good enough to install without changes. The senior managers in attendance started talking with each other about setting up a help desk for three months to handle user questions from across the country as the system was rolled out. Nobody had the funds for a help desk in their budget, though, and the conversation became heated. The team was stunned; they had been fully prepared to implement their initial user interface design as the final design for the system.

Figure 4-1. Reenactment of the field usability test of the low-fidelity prototype of the security system with a target user

I spoke up and said that I had recommendations for how we could resolve the usability problems and eliminate the need for a help desk. During the session debriefs, the participants talked about how they were typically multitasking, described the rote manner in which they worked at the terminals, and stated that the system needed to accommodate their need to work in this type of atmosphere. I had analyzed all the data and identified error patterns that were the most serious for us to address using the Problem Severity Classification Matrix (PSC). The PSC matrix provides a ranking of usability problems by severity and is used to determine allocation of resources for addressing user interface issues. The PSC ratings are computed on a two-dimensional scale, where one axis represents the impact of the usability problem on the user’s ability to complete a task, and the other axis represents the frequency of occurrence as defined by the percentage of users who experienced the problem. Ratings range from 1 to 3, where 1 is most severe.[6] The developers were comfortable with the PSC matrix and ratings as they were used to classifying functional problems in the code in this manner.
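To make the two-axis idea concrete, here is a minimal sketch of a severity lookup. The text specifies only the two axes (impact and percentage of users affected) and the 1-to-3 range; the three impact levels, the 50% and 10% frequency cut-offs, and the cell values in `SEVERITY` are all hypothetical choices for illustration, not the published matrix.

```python
from enum import IntEnum


class Impact(IntEnum):
    """Hypothetical impact levels (the first axis of the matrix)."""
    PREVENTS_COMPLETION = 0
    SIGNIFICANT_DELAY = 1
    MINOR = 2


# Hypothetical cut-offs for the second axis: share of participants affected.
HIGH_FREQUENCY = 0.50
MEDIUM_FREQUENCY = 0.10

# Hypothetical matrix. Rows follow Impact; columns are high, medium, and
# low frequency. 1 is the most severe rating, 3 the least.
SEVERITY = (
    (1, 1, 2),
    (1, 2, 3),
    (2, 3, 3),
)


def frequency_band(affected: int, participants: int) -> int:
    """Map the share of participants affected to a column index."""
    share = affected / participants
    if share >= HIGH_FREQUENCY:
        return 0
    if share >= MEDIUM_FREQUENCY:
        return 1
    return 2


def psc_rating(impact: Impact, affected: int, participants: int) -> int:
    return SEVERITY[impact][frequency_band(affected, participants)]


def prioritize(problems, participants):
    """problems: (description, Impact, participants affected) tuples.
    Returns (rating, description) pairs, most severe first."""
    return sorted(
        (psc_rating(impact, affected, participants), description)
        for description, impact, affected in problems
    )
```

Sorting by rating gives the development team the same kind of ordered defect list they already used for functional bugs, so severity 1 and 2 items can be scheduled first.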

I made four recommendations for changes to resolve the severity 1 and 2 end-user usability issues. The redesign work included improving the clarity and visibility of the information displayed on screens, providing error-message content that enabled users to recover gracefully, and simplifying two types of navigational complexity. Three of the recommendations were quickly implemented in the system code by development staff, and a redesigned low-fidelity prototype incorporating these changes was tested in Test 2. The fourth recommendation could not be implemented at that time, as the team could not determine a technically feasible way to address the navigational problem.

I redesigned the low-fidelity prototype and ran Test 2 in the usability laboratory at the development site. The usability laboratory consists of a control room and a usability studio. There is a wall with a one-way mirror between the two rooms. The development team was very engaged in the usability test and took turns watching from the control room (so that the users were not disturbed) while I ran the sessions in the studio with the participants. The extra time necessary to recruit and schedule participants was offset by the significant value to the development team in their education about usability and user interface design.

The results of Test 2 were very encouraging; 90% of the participants signed on correctly after seven attempts. This was close to the 95% level specified in the usability objective, but the number of learning attempts required to achieve that level remained unacceptable. The average (median) time on task for participants in Test 2 was less than half of what it was in Test 1, and ranged from about 80 seconds in Trial 1 to about 20 seconds in Trial 3. These times were well above the project team’s goal of 8 seconds.

To understand the ideal time on task that was possible with the prototype, we had an employee who had expertise in the security system and the branch office applications complete the usability test. This expert completed each task error-free and in 6 seconds. Clearly, improvement was possible.

When asked, 60% of the Test 2 participants thought the security system was good enough to install without any changes. While the team was heartened by the improvement in the usability of the security system, the low participant opinion scores in Test 2 pushed the team to find a solution to the remaining usability problem.

One severe usability problem that had been identified in Test 1 recurred in Test 2. This problem involved a complicated navigational flow where users navigated down two different paths depending on the mnemonic chosen at sign-on. One path was clear to the users. The second path was confusing and could not be addressed at the end of Test 1 because of technical problems. At the end of Test 2, however, the project architect, who had been thinking about the problem since the end of Test 1, created a simple and innovative solution to finesse the situation, and it was implemented. The solution involved the creation of a bridge that replaced a central navigational fork. This change both simplified and standardized the navigational flow. All users could utilize the bridge regardless of the mnemonic chosen at sign-on. The complexity of the security system was now transparent to users: after authenticating with a user ID and password, they saw only the types of transactions they were authorized to make.

For Test 3, I ran the usability sessions in the lab, and the participants worked with the live code on a test system. In parallel, the project team was conducting functional testing of the system. The solution implemented for the remaining major usability problem identified in Test 2 worked very well. In Test 3, 100% of the participants signed on error-free by the third attempt, so user performance met the usability objective set at the beginning of the project. User time on task ranged from 24 seconds to 7 seconds in Test 3, approaching the expert’s ideal of 6 seconds. In Test 3, 100% of the participants thought the new security system was good enough to install without any changes. The solution implemented at the end of Test 2 created one minor usability problem in Test 3, and this issue was resolved prior to the rollout of the code to the branches.

The system was rolled out to the branches on time, under budget, and with high user satisfaction. Analysis of support calls regarding the product and of survey data collected from users documented that the system was very usable and that the users were delighted not to have to remember or keep track of all of the individual mnemonics anymore. The mnemonics for related business application tasks were now grouped under one master mnemonic. The security application simplified the sign-on process and gave users a seamless environment across the transactions they needed to complete to provide high-quality service to IBM customers, while maintaining the security of sensitive data. The architectural changes to the design and layout of the user interface, navigation, and error messages all contributed to simplifying the security system for the users in the branch offices.

The collaboration between the HCI lead and the security technical staff was essential in creating the final design. The success in designing and deploying the new security application, and the resulting return on investment, was achieved through the partnership between the HCI lead and the other team members in pursuing the shared goal of a usable and effective security solution. A simple cost-benefit ratio analysis provides clear data on the value of usability in the development of a security application.[7]

Postrelease costs were also significantly lower than would normally be anticipated. There were no change requests after installation, and no updates were required to the code. These achievements were unusual and they were rewarded by the organization. The product manager communicated the project results up the management chain. The entire project team was awarded bonuses for the high-quality deliverable. The division senior executive called together the team of senior product managers and reviewed the positive outcome that was the result of the inclusion of HCI work in the development project. Usability became a critical path in project planning and execution. A number of the developers became very skilled practitioners in this field. One of my colleagues from that initial experience is the manager of an HCI consulting group within the company, and we remain in regular contact.

Before I joined the project team, they had planned to implement the initial design for the system (the one evaluated in Test 1). I analyzed the cost-benefit of the usability work by simply calculating the financial value of the improvement in user productivity on the security tasks for the end-user population over the first three sign-on attempts after release.[8],[9] I then compared the value of the increased productivity to the cost of the HCI activities on the project. This resulted in a 1:2 cost-benefit ratio: for every dollar invested in usability, the organization gained two back. When the cost savings from further sign-on attempts, the greatly reduced disruption of the users, and the reduced burden on the help desk staff were included, the ratio became 1:10.
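The arithmetic behind this kind of analysis is simple enough to sketch. The function names are hypothetical, and every number in the usage lines is invented purely so the calculation is easy to follow; none of them reproduce the project’s actual figures. The sketch values time saved per user over the first few sign-on attempts at an assumed loaded hourly cost, then divides by the cost of the HCI work.

```python
from typing import Sequence


def productivity_benefit(
    users: int,
    seconds_before: Sequence[float],
    seconds_after: Sequence[float],
    hourly_cost: float,
) -> float:
    """Dollar value of time saved over the first len(seconds_before)
    sign-on attempts, summed over every user in the population."""
    if len(seconds_before) != len(seconds_after):
        raise ValueError("need one before/after time per attempt")
    saved_per_user = sum(b - a for b, a in zip(seconds_before, seconds_after))
    return users * saved_per_user / 3600 * hourly_cost


def cost_benefit_ratio(hci_cost: float, benefit: float) -> str:
    """Express the result as 1:N, i.e. N dollars back per dollar invested."""
    return f"1:{benefit / hci_cost:g}"


# All figures below are invented for illustration only.
benefit = productivity_benefit(
    users=1200,
    seconds_before=[150, 90, 45],  # initial design, attempts 1-3
    seconds_after=[30, 15, 9],     # redesigned system, attempts 1-3
    hourly_cost=50.0,
)
print(benefit)                              # 3850.0
print(cost_benefit_ratio(3080.0, benefit))  # 1:1.25
```

Extending the analysis to later attempts, reduced disruption, and help desk savings (as described above) amounts to adding further benefit terms to the numerator.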

This security application case study described a fairly small and well-defined effort: the project goals were clear, the target users were easily identified and accessible for usability design and evaluation work, and the functionality was designed and implemented using existing technology. The second case study, described in the next section, provides an overview of a project with a greater amount of complexity in the goals of the privacy tools, the target users, the context of use of the privacy functionality to be designed, and the use of innovative technology that needs to integrate and work smoothly with legacy systems.

In 2002, we became involved in a research project concerned with the development of approaches for helping organizations protect the personal information (PI) that they used and stored about their customers, constituents, patients, and/or employees. Most organizations store PI in heterogeneous server system environments. In our initial review of the literature and interview research, we found that organizations do not currently have a unified way of defining or implementing privacy policies that encompass data collected and used across different server platforms.[10] This makes it difficult for the organizations to put in place proper management and control of PI that allows the data users to access and work with the PI in line with organizational privacy policies. Our task was to collect data to help us understand the problem and propose solutions that would meet the needs of organizations for privacy policy management.

This work involved a wide range of user-centered techniques (discussed earlier in the chapter). This project differs from the security application presented earlier in at least three important ways; it therefore highlights the application of different usability and evaluation techniques to the creation of a privacy application:

The case study involves the creation and use of new software technologies rather than the addition of an incremental improvement to a system using existing technology.

The targeted user community is broader and includes different user groups across a variety of domains and geographies.

The solution requires integration with the organization’s heterogeneous configurations, including valuable legacy applications.

To create technology to support all aspects of organizational development and use of privacy policies, we needed to understand the concerns of a large and diverse user group. The research included four steps:

Identify the privacy needs within organizations through email survey questionnaires.

Refine the needs through in-depth interviews with privacy-responsible individuals in organizations.

Design and validate a prototype of a technological approach to meeting organizational privacy needs through onsite scenario-based walkthroughs with target users.

Collect empirical data in a controlled usability laboratory test to understand the usability of privacy policy-authoring methods included in our proposed design.

We briefly describe these activities in the following sections, and provide a reference to a more complete description of this ongoing work.

Our initial work involved gathering data from people about their needs related to privacy-enabling technology. Interviews and surveys are generally appropriate methods for this stage of a project. We completed the initial interview research in two steps:

An email survey of 51 participants to identify key privacy concerns and technology needs, and

In-depth interviews with a subset of 13 participants from the original sample to understand their top privacy concerns and technology needs in the context of scenarios about the flow of PI through business processes used by their organizations

The goal of both of these activities was to create an initial draft of the requirements definition and the user profile definition. For the first step, we recruited participants from industry and government organizations who were identified as being concerned with privacy issues. These participants and their organizations represent early technology adopters concerning privacy, and we welcomed their assistance in the early identification of issues and requirements for product development. They were recruited through a variety of mechanisms including follow-ups on attendance at privacy breakout sessions at professional conferences and referrals from members of the emerging international professional privacy community.

The email survey was designed to give us an initial view of the privacy-related problems and concerns that are faced by organizations. We recognized that our target user group was made up of people with highly responsible jobs and very busy schedules, so our email survey consisted of three questions carefully chosen to help us understand their perceptions of how their organizations handled privacy issues. These were:

What are your top privacy concerns regarding your organization?

What types of privacy functionality would you like to have available to address your privacy concerns regarding your organization?

At this time, what action is your organization taking to address the top privacy concerns you listed above?

Participants were asked to rank-order their top three choices on questions 1 and 2 from a list of choices provided, and were also allowed to fill in and rank “Other” choices if their concern was not represented in the list. For question 3, they could choose as many options as were appropriate, or choose “Other” and explain the approaches to privacy their organizations were currently using.
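The text does not say how the rank-ordered answers were combined, so the sketch below assumes a common weighted-points scheme (3 points for a first choice, 2 for a second, 1 for a third). The weights and function names are hypothetical. Write-in “Other” answers are treated as additional choice strings, and the multi-select question 3 is simply tallied.

```python
from collections import Counter
from typing import Iterable, Sequence

# Hypothetical weights: 3 points for a first choice, 2 for second, 1 for third.
RANK_POINTS = (3, 2, 1)


def rank_scores(responses: Iterable[Sequence[str]]) -> list[tuple[str, int]]:
    """Aggregate top-three rankings (questions 1 and 2). Each response lists
    choices in rank order; write-in "Other" answers are just more strings."""
    scores = Counter()
    for ranking in responses:
        if len(ranking) > len(RANK_POINTS):
            raise ValueError("at most three ranked choices per response")
        if len(set(ranking)) != len(ranking):
            raise ValueError("a choice may be ranked only once")
        for points, choice in zip(RANK_POINTS, ranking):
            scores[choice] += points
    return scores.most_common()


def selection_counts(responses: Iterable[Iterable[str]]) -> Counter:
    """Tally multi-select answers (question 3); each option counts once
    per respondent."""
    counts = Counter()
    for selected in responses:
        counts.update(set(selected))
    return counts
```

Weighted points reward both how often a concern is mentioned and how highly it is ranked; with only 51 respondents it is also worth reporting the raw first-choice counts alongside the scores.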

From these questions, we learned that there were considerable similarities in organizations across the different geographies. We also learned that privacy protection of information held by organizations was crucial for both industry and government, although their concerns were slightly different. Both segments were concerned about misuse of PI data by internal employees, as well as by external users (hackers), the protection of PI data in legacy applications, and, for industry, the economic harm that a publicized privacy breach could have on their brand.[11] The top-ranked privacy functionality desired included:

“One integrated solution for legacy and web data”

“Application-specific and usable privacy policy authoring, implementation, auditing, and enforcement”

“The ability to associate privacy policy information with individual data elements in a customer’s file”

“Privacy protection for data stored on servers from IT staff with no need to view data content”

The survey also gave us a picture of activities underway in organizations to address their needs. Together, these data showed us where to focus our efforts to have the biggest impact in meeting user needs.

Our second step was to conduct in-depth interviews with target users. The goals of the interviews were to build a deeper understanding of the participants’ and their organizations’ views regarding privacy, their privacy concerns, and the value they perceived in the desired privacy technology they spoke of in the context of scenarios of use involving PI in their organizations. We find that having users develop and describe scenarios of use is highly effective at this stage. The majority of the interview sessions were centered on discussion of such a scenario provided by the respondent describing PI flows in their organization.

We wanted to identify and understand examples of how PI flowed through business processes in the organization, the strengths and weaknesses of these processes involving PI, the manual and automated processes to address privacy, and the additional privacy functionality they need in the context of these scenarios. We used these to create the set of core task scenarios used for the rest of the project. As in Step One, we chose the participants so that they represented a range of industries, geographies, and roles. All of the interviews were completed by telephone and lasted about an hour. The team transcribed their interview notes and discussed and annotated them to create one unified view of each session. The interviews produced large amounts of qualitative data that was analyzed using contextual analysis.[12]

In their scenarios about the flow of PI through the organizational business processes, the interviewees shared a view that privacy protection of PI depends on the people, the business processes, and the technology to support them. As the interviewees looked to develop or acquire technology to automate the creation, enforcement, and auditing of privacy policies, they noted a number of concerns at different points in the scenario—from authoring policies to finding and deleting PI data. We noted that there are large gaps between needs and the ability of technology to meet them, particularly concerning the ability to perform privacy compliance checking.

Using the information from the interviews, we created a set of core task scenarios for key domains (banking/finance, health care, and government) and updated the user profile definition and user requirements definition. We reviewed these scenarios with a subset of our participants as well as a new set of target users to confirm that the scenarios correctly captured the requirements that we had identified. We used the user requirements definitions, user profile definitions, and core task scenarios that resulted from the survey and interview research in these two steps to guide the development of the privacy management prototype in Step Three.

Using the completed survey and interview research, the team defined and prioritized a set of requirements for privacy-enabling tools. There were many needs that customers identified, and we decided to focus our work on providing tools to assist in several aspects of privacy policy management. We call our prototype of a privacy policy management tool SPARCLE (Server Privacy ARchitecture and CapabiLity Enablement). The overall goal in designing SPARCLE was to provide organizations with tools to help them create understandable privacy policies, link their written privacy policies with the implementation of the policy across their IT configurations, and then help them to monitor the enforcement of the policy through internal audits. The project goals at this point were to determine if the privacy functionality that had been identified for inclusion in SPARCLE from Steps One and Two met the users’ needs, identify any new requirements, determine the effectiveness of the interaction methods for the targeted user community, and gain contextual information to inform the lower-level design of the tool.

We started with design sketches and iterated through low-fidelity to medium-fidelity prototypes, which we evaluated first with internal stakeholders and then with target customers. The first step was to sketch a set of task-based screens on paper and to discuss the flow and possible functionality associated with the task and how that should be represented in the screens. Once the team came to an agreement on these rough drawings, we proceeded to create a low-fidelity prototype that consisted of a set of HTML screens that illustrated the steps in the user scenarios. The prototype described how two fictional privacy and compliance officers within a health care organization, Pat User and Terry Specialist, used the prototype to author and implement the privacy policies and to audit the privacy policy logs. This version of the prototype was reviewed by the team and by other HCI and security researchers internally in our organization. Based on their comments, the prototype design was updated, and it was upgraded to a medium-fidelity prototype by the inclusion of dynamic HTML.

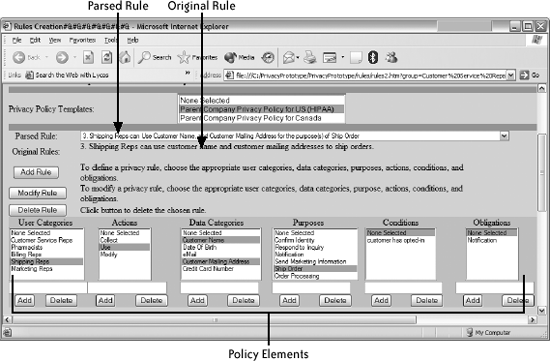

The prototype has many aspects that we will not try to describe here. To illustrate one of them, the use of natural language parsing to author privacy policies, we briefly describe how it became a part of our design. During the survey and interview research, many of the participants indicated that privacy policies in their organizations were created by committees made up of business process specialists, lawyers, security specialists, and information technologists. Based on the range of skills generally possessed by people with these varied roles, we hypothesized that different methods of defining privacy policies would be necessary. SPARCLE was designed to support users with a variety of skills by allowing individuals responsible for the creation of privacy policies to define the policies using natural language with a template to describe privacy rules (shown in Figure 4-4) or to use a structured format to define the elements and rule relationships that will be directly used in the machine-readable policy (shown in Figure 4-5). We designed SPARCLE to keep the two formats synchronized. For users who prefer authoring with natural language, SPARCLE transforms the policy into a structured form so that the author can review it, and then translates it into a machine-readable format such as XACML, EPAL,[13] or P3P.[14] For organizational users who prefer to author rules using a structured format, SPARCLE translates their policies into both a natural language format and the machine-readable version. During the entire privacy policy authoring phase, users can switch between the natural language and structured views of the policy for viewing and editing purposes.
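The idea of keeping a natural language view and a structured view synchronized can be sketched with a round trip between the two. This is only a minimal illustration, not SPARCLE’s implementation: a rigid sentence template matched by a regular expression stands in for real natural language parsing, and the rule shape (four compulsory elements, named here `user`, `action`, `data`, and `purpose`, plus an optional condition and obligation), the template wording, and the toy XML output are all assumptions. The XML is deliberately *not* XACML, EPAL, or P3P.

```python
import re
import xml.etree.ElementTree as ET
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class PolicyRule:
    """Hypothetical rule shape: four compulsory elements plus an optional
    condition and obligation."""
    user: str
    action: str
    data: str
    purpose: str
    condition: Optional[str] = None
    obligation: Optional[str] = None


# Hypothetical sentence template; the action is limited to one word so the
# boundary between the action and the data category is unambiguous.
RULE_PATTERN = re.compile(
    r"^(?P<user>.+?) can (?P<action>\w+) (?P<data>.+?)"
    r" for the purpose of (?P<purpose>.+?)"
    r"(?: if (?P<condition>.+?))?"
    r"(?:, and must (?P<obligation>.+?))?\.$"
)


def to_natural_language(rule: PolicyRule) -> str:
    """Structured view -> natural language view."""
    text = f"{rule.user} can {rule.action} {rule.data} for the purpose of {rule.purpose}"
    if rule.condition:
        text += f" if {rule.condition}"
    if rule.obligation:
        text += f", and must {rule.obligation}"
    return text + "."


def parse_natural_language(text: str) -> PolicyRule:
    """Natural language view -> structured view (template sentences only)."""
    match = RULE_PATTERN.match(text.strip())
    if match is None:
        raise ValueError(f"sentence does not follow the template: {text!r}")
    return PolicyRule(**match.groupdict())


def to_structured(rule: PolicyRule) -> dict:
    """Element-by-element view an author could review or edit."""
    return asdict(rule)


def to_toy_xml(rule: PolicyRule) -> str:
    """Toy machine-readable form for illustration; not a real policy language."""
    root = ET.Element("rule")
    for name, value in asdict(rule).items():
        if value is not None:
            ET.SubElement(root, name).text = value
    return ET.tostring(root, encoding="unicode")
```

Because both views are generated from the same `PolicyRule` object, an edit made in either view can be re-rendered in the other, which is the property the authoring phase relies on.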

Once the machine-readable policy is created, SPARCLE provides a series of mapping steps to allow the user to identify where in the organization’s data stores the elements of the privacy policy are stored. This series of tasks will most likely be completed by different roles in the information technology (IT) area with input from the business process experts for different lines of business within the organization. Finally, SPARCLE provides internal compliance or auditing officers with the ability to create reports based on logs of accesses to PI.
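The mapping and auditing steps can be illustrated with a small sketch. Everything in it is hypothetical: the `Access` log record, the `DATA_MAP` from policy data categories to physical locations (including the legacy entry), and the `PERMITTED` set of policy-allowed combinations. It shows two reports an auditor might want: policy categories that still have no mapped location, and logged accesses that the policy does not permit.

```python
from collections import Counter
from typing import NamedTuple


class Access(NamedTuple):
    """One hypothetical log entry describing an access to PI."""
    user_category: str
    action: str
    data_category: str
    purpose: str


# Hypothetical mapping from policy data categories to physical locations,
# as might be filled in by IT staff during the mapping steps.
DATA_MAP = {
    "medical records": ["clinical_db.patients.diagnosis", "legacy_host.MEDREC"],
    "billing addresses": ["billing_db.accounts.address"],
}

# Hypothetical (user, action, data, purpose) combinations the policy permits.
PERMITTED = {
    ("doctor", "view", "medical records", "treatment"),
    ("billing clerk", "use", "billing addresses", "billing"),
}


def unmapped_categories(policy_categories):
    """Data categories named in the policy that have no mapped location yet."""
    return [c for c in policy_categories if not DATA_MAP.get(c)]


def audit_report(log):
    """Count permitted accesses and tally each non-permitted combination."""
    permitted = 0
    flagged = Counter()
    for entry in log:
        if tuple(entry) in PERMITTED:
            permitted += 1
        else:
            flagged[entry] += 1
    return {"permitted": permitted, "flagged": dict(flagged)}
```

Exact-match lookup is the simplest possible check; a real policy engine would also have to evaluate conditions and obligations, which this sketch ignores.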

At the same time that our iterative design was occurring, the team worked to recruit representatives from the three selected domains of health care, banking/finance, and government who would be willing to participate in design feedback sessions to evaluate the design. Our target user group was representatives from organizations in Canada, the U.S., and Europe who are responsible for creating, implementing, and auditing the privacy policies for their organizations. Note that it is a significant effort to recruit study participants, especially when the target user group is very specialized and has very limited time. We identified these individuals using a combination of methods including issuing a call for participation at a privacy session held at an industry conference, contacting and getting referrals from participants from the earlier studies, and employing professional and personal contacts within our department to identify potential participants.

For the review sessions, we scheduled 90-minute privacy walkthrough sessions onsite at the participants’ work locations. For the first iteration of the prototype, walkthrough participants (seven participants in five sessions) rated the prototype positively (an average rating of 2.6 on a 7-point scale, with 1 indicating “highest value” and 7 indicating “no value”). During the second iteration of walkthrough sessions in which we added a capability of importing organizational or domain-specific policy templates, the participants (15 participants in 6 sessions) also rated the revised prototype very positively (an average rating of 2.5 on the same scale).

We present this summary result because it communicates the overall response to the prototype. However, the primary purpose for the sessions was to gather more qualitative responses from the participants about the value of the system to their task of managing privacy: what worked well, what was missing, and how they would like features designed.

The qualitative comments were invaluable in helping us to better understand the needs of organizational users for privacy functionality and how to update the design of the prototype to better meet those needs. Based on the comments we received, we also decided to conduct the empirical laboratory usability test of the two authoring methods described in Step Four.

While formal studies have become less central to usability work than they once were, they are still an important technique for investigating specific issues. We ran an empirical usability laboratory study to compare the two privacy policy authoring methods employed in the prototype. In order to provide a baseline comparison for the two methods (Natural Language with a Guide, and Structured Entry from Element Lists), we included a control condition that allowed users to enter privacy policies in any format that they were satisfied with (Unguided Natural Language). We ran this study internally in our organization, using a user profile describing people experienced with computers but novices in terms of creating privacy policy rules. The results still need to be validated with the target users; however, we believed that because the crux of the study was the usability of three different methods for authoring privacy rules, the use of novice users was appropriate. It was also an extremely practical, efficient, and cost-effective strategy, as policy experts are a rare resource, and it would be difficult or prohibitively expensive to collect this data in a laboratory setting with them.

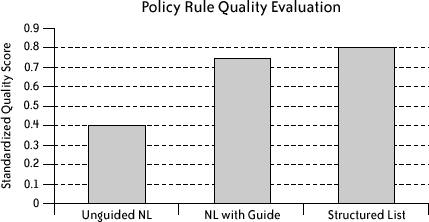

Thirty-six employees were recruited through email to participate in the study. The participants had no previous experience in privacy policy authoring or implementation. Each participant completed one task in each of the three conditions. All participants started with a privacy rule task in the Unguided Natural Language control condition (Unguided NL). Then, half of the participants completed a similar task in the Natural Language with a Guide condition (NL with Guide), followed by a third task in the Structured Entry from Element Lists condition (Structured List). The other half of the participants completed the Structured List condition followed by the NL with Guide condition.

In each task, we instructed participants to compose a number of privacy rules for a predefined task scenario. Participants worked on three different scenarios in the three tasks. We developed the scenarios in the context of health care, government, and banking. Each scenario contained five or six privacy rules, including one condition and one obligation. The order of the scenarios was balanced across all participants.
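The design above combines a fixed control condition, a counterbalanced order for the two treatment conditions, and a balanced scenario order. The following is a minimal Python sketch of how such a session plan could be generated. The text says only that scenario order was “balanced”; the even/odd split of participants, the Latin-square rotation of scenarios, and the names `plan_sessions` and `Session` are illustrative assumptions, not the procedure the study actually used.

```python
from dataclasses import dataclass

CONTROL = "Unguided NL"
TREATMENTS = ("NL with Guide", "Structured List")
# Scenario domains named in the text; the rotation scheme below is an assumption.
SCENARIOS = ("health care", "government", "banking")


@dataclass
class Session:
    participant: int
    # (condition, scenario) pairs in the order the participant performs them.
    tasks: list[tuple[str, str]]


def plan_sessions(n_participants: int) -> list[Session]:
    """Build a hypothetical counterbalanced task plan for each participant.

    Every participant starts with the control condition. Even-numbered
    participants then do NL with Guide before Structured List; odd-numbered
    participants do the reverse. Scenarios are rotated (a 3x3 Latin square)
    so that each scenario appears equally often in each task position.
    """
    sessions = []
    for p in range(n_participants):
        treatments = TREATMENTS if p % 2 == 0 else TREATMENTS[::-1]
        conditions = (CONTROL,) + treatments
        shift = p % len(SCENARIOS)
        scenarios = SCENARIOS[shift:] + SCENARIOS[:shift]
        sessions.append(Session(p, list(zip(conditions, scenarios))))
    return sessions


if __name__ == "__main__":
    for session in plan_sessions(36)[:3]:
        print(session.participant, session.tasks)
```

Because the condition split repeats every 2 participants and the scenario rotation every 3, any multiple of 6 participants (such as 36) yields a fully balanced plan in this sketch.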

We recorded the time that the participants took to complete each task and the privacy rules that participants composed. We also collected, through questionnaires, participants’ perceived satisfaction with the task completion time, the quality of rules created, and the overall experience after participants completed each task. At the end of the session, participants completed a debrief questionnaire about their experiences with the three rule authoring methods. To compare the quality of the rules participants created under different conditions and scenarios, we developed a standard metric for scoring the rules.

Figure 4-6 shows the quality of the rules created using each method. We counted each element of a rule as one point. A basic rule of four compulsory elements therefore had a score of 4, and a scenario consisting of five rules, including one condition and one obligation, had a total score of 22 (five rules of four elements each, plus one point each for the condition and the obligation). We counted the number of correct elements that participants specified in their rules and divided that number by the total score of the specific scenario. This yielded a standardized score, the percentage of elements correctly identified, which we compared across conditions.
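The scoring arithmetic can be sketched in a few lines of Python. This is a minimal illustration, assuming that each condition and each obligation adds exactly one element to the scenario total (which is consistent with the 22-point total of 5 × 4 + 1 + 1). The names `Scenario`, `total_score`, and `standardized_score` are hypothetical, and the example count of 11 correct elements is invented for illustration, not study data.

```python
from dataclasses import dataclass

# Each basic rule has four compulsory elements (from the text).
ELEMENTS_PER_RULE = 4


@dataclass
class Scenario:
    name: str
    rules: int
    conditions: int   # assumption: each condition adds one element
    obligations: int  # assumption: each obligation adds one element

    def total_score(self) -> int:
        """Maximum possible score: one point per element in the scenario."""
        return self.rules * ELEMENTS_PER_RULE + self.conditions + self.obligations


def standardized_score(correct_elements: int, scenario: Scenario) -> float:
    """Fraction of the scenario's elements that a participant specified correctly."""
    total = scenario.total_score()
    if not 0 <= correct_elements <= total:
        raise ValueError("correct_elements must be between 0 and the scenario total")
    return correct_elements / total


if __name__ == "__main__":
    example = Scenario("example", rules=5, conditions=1, obligations=1)
    print(example.total_score())             # 22
    print(standardized_score(11, example))   # 0.5
```

Dividing by each scenario’s own total is what lets scores from scenarios with five rules and with six rules be compared on one percentage scale.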

The NL with Guide method and the Structured List method helped users create rules of significantly higher quality than did the Unguided NL method. There was no significant difference between the NL with Guide method and the Structured List method. Using the Unguided NL method, participants correctly identified about 42% of the elements in the scenarios, while the NL with Guide method and the Structured List method helped users to correctly identify 75% and 80% of the elements, respectively.

The results of the experiment confirmed that both the NL with Guide method and the Structured List method were easy to learn and use, and that they enabled participants to create higher-quality rules than the Unguided NL method did. Participants’ satisfaction with both methods provided some indication that there is value in allowing users to choose between the two methods and switch between them for different parts of the task, depending on their own preferences and skills. Other feedback from the study gave us additional guidance on where to focus our future design iterations.

Given these results, we believe that SPARCLE is now ready to be transformed into a high-fidelity end-to-end prototype so that we can test its functionality more completely with privacy professionals. While we have used predefined scenarios with predefined policies for our evaluations so far, a prototype that has been integrated with a fully functional natural language parser and a privacy enforcement engine will enable us to conduct richer and more complete tests of functionality with the participants’ organizational privacy policies before moving to product-level code.

Our experiences at IBM show that HCI activities can have a positive return both for the organization creating a piece of technology and for the people who actually use it.

We encourage you to start integrating HCI activities into your development work in the security and privacy domain. It is helpful to start with a small to medium-size project or product as a test case and learning vehicle. Bring an HCI expert on board, leverage this person’s skills, and build expertise across the larger team. Hold team discussions so that you can build on what you learn in this area within your organization and in your professional associations. Communicate your successes and failures so that people within and outside your organization can benefit from your experiences.

Clare-Marie Karat is a Research Staff Member at the IBM T.J. Watson Research Center. Dr. Karat conducts HCI research in the areas of privacy, security, and personalization. She is the editor of the book Designing Personalized User Experiences in eCommerce, published in 2004 (Springer). She is an editorial board member of the ACM interactions, the British Computer Society’s Interacting with Computers, and Elsevier’s International Journal of Human Computer Studies journals; a reviewer for the IEEE Security and Privacy journal; and a technical committee member of the CHI, HFES, and INTERACT conferences, the Symposium on Usable Privacy and Security, and the User Modeling Personalization and Privacy Workshop.

Carolyn Brodie is a Research Staff Member at IBM’s T.J. Watson Research Center. Dr. Brodie’s current research focuses on the design and development of usable privacy and security functionality for organizations. She received her Ph.D. in Computer Science from the University of Illinois at Urbana-Champaign in 1999, where she developed a methodology for the design of military planning tools. Additional research interests include the personalization of web sites and the use of collaboration tools to enhance information flow in organizations.

John Karat is a Research Staff Member at the IBM T.J. Watson Research Center. Dr. Karat conducts HCI research in a variety of areas including privacy, personalization, and information management. Dr. Karat is past chairman of the International Federation for Information Processing Technical Committee on HCI (IFIP TC13); North American editor for the journal Behaviour and Information Technology; and Editor-in-Chief of the Kluwer Academic Publishers series on HCI. He is a member of the ACM SIGCHI Executive Committee and has been actively involved in ACM CHI and DIS and in the IFIP INTERACT conferences in the HCI field.

jkarat@us.ibm.com

[1] J. Whiteside, J. Bennett, and K. Holtzblatt, “Usability Engineering: Our Experience and Evolution,” in M. Helander (ed.), Handbook of Human Computer Interaction (Amsterdam: Elsevier, 1988).

[2] C. Karat, “A Business Case Approach to Usability Cost-Justification for the Web,” in R. Bias and D. Mayhew (eds.), Cost-Justifying Usability (London: Academic Press, 2005).

[3] C. Karat, R. Campbell, and T. Fiegel, “Comparison of Empirical Testing and Walkthrough Methods in User Interface Evaluation,” Human Factors in Computing Systems—CHI 92 Conference Proceedings (New York: ACM, 1992), 397–404.

[4] C. Karat, “Iterative Usability Testing of a Security Application,” in Proceedings of the Human Factors Society (1989), 272–277.

[5] J. Gould, “How to Design a Usable System,” in M. Helander (ed.), Handbook of Human Computer Interaction (Amsterdam: Elsevier, 1988).

[6] C. Karat, R. Campbell, and T. Fiegel, 397–404.

[7] For more information on calculating the cost benefit of usability, see ibid and R. Bias, Cost-Justifying Usability: An Update for the Internet Age, 2nd Edition (London: Academic Press, 2005).

[8] C. Karat, “Cost-Benefit Analysis of Usability Engineering Techniques,” Proceedings of the Human Factors Society, 839–843.

[9] C. Karat, 2005.

[10] A. Anton, Q. He, and D. Baumer, “The Complexity Underlying JetBlue’s Privacy Policy Violations,” IEEE Security and Privacy (Aug./Sept. 2004).

[11] J. Karat, C. Karat, C. Brodie, and H. Feng, “Privacy in Information Technology: Designing to Enable Privacy Policy Management in Organizations,” International Journal of Human Computer Studies (2005), 153–174.

[12] H. Beyer and K. Holtzblatt, Contextual Design (New York: Morgan Kaufmann, 1997).

[13] P. Ashley, S. Hada, G. Karjoth, C. Powers, and M. Schunter, Enterprise Privacy Architecture Language (EPAL 1.2), W3C Member Submission 10-Nov-2003; http://www.w3.org/Submission/EPAL/.

[14] L. Cranor, Web Privacy with P3P (Sebastopol, CA: O’Reilly, 2002).
