Present-day information systems have became so complex that troubleshooting them effectively necessitates real-time performance, data presented at fine granularity, a thorough understanding of data interpretation, and a pinch of skill. The time when you could trace failure to a few possible causes is long gone. Availability standards in the industry remain high and are pushed ever further. The systems must be equipped with powerful instrumentation, otherwise lack of information will lead to loss of time and—in some cases—loss of revenue.

Monitoring empowers operators to catch complications before they develop into problems, and helps you preserve high availability and deliver high quality of service. It also assists you in making informed decisions about the present and the future, serves as input to automation of infrastructures and, most importantly, is an indispensable learning tool.

Monitoring has become an umbrella term whose meaning strongly depends on the context. Most broadly, it refers to the process of becoming aware of the state of a system. This is done in two ways, proactive and reactive. The former involves watching visual indicators, such as timeseries and dashboards, and is sometimes what administrators mean by monitoring. The latter involves automated ways to deliver notifications to operators in order to bring to their attention a grave change in system’s state; this is usually referred to as alerting.

But the ambiguity doesn’t end there. Look around on forums and mailing lists and you’ll realize that some people use the term monitoring to refer to the process of measurement, which might not necessarily involve any human interaction. I’m sure my definitions here are not exhaustive. The point is that, when you read about monitoring, it is useful to discern as early as possible what process the writer is actually talking about.

Some goals of monitoring are more obvious than others. To demonstrate its full potential, let me point out the most common use cases, which are connected to overseeing data flow and the process of change in your system.

Speedy detection of threatening issues is by far the most important objective of monitoring and is the function of the alerting part of the system. The difficulty consists of pursuing two conflicting goals: speed and accuracy. I want to know when something is not right and I want to know about it fast. I do not, however, want to get alarmed because of temporary blips and transient issues of negligible impact. Behind every reasonable threshold value lurks a risk for potentially disastrous issues slipping under the radar. This is precisely why setting up alarms manually is very hard and speculating about the right threshold levels in meetings can be exhausting, frustrating, and unproductive. The goal of effective alerting is to minimize the hazards.

In the business of availability, downtime is a dreaded term. It happens when the system is subject to full loss of availability. Availability loss can also be partial, or unavailable only for a portion of users. The key is early detection and prevention in busy production environments.

Downtime usually translates directly to losses in revenue. A complete monitoring setup that allows for timely identification of issues proves indispensable. Ideally, monitoring tools should enable operators to drill down from a high-level overview into the fine levels of detail, granular enough to point at specifics used in analysis and identification of a root cause.

The root cause establishes the real reason (and its many possible factors) behind the fault. The subsequent corrective action builds upon the findings from root cause analysis and is carried out to prevent future occurrences of the problem. Fixing the most superficial problem only (or proximate cause) guarantees recurrence of the same faults in the long run.

Paying close attention to anomalous behavior in the system help to detect resource saturation and rare defects. A number of faults get by Quality Assurance (QA), are hard to account for, and are likely to surface only after long hours of regression testing. A peculiar group of rare bugs emerge exclusively at large scale when exposed to extremely heavy system load. Although hard to isolate in test environments, they are consistently reproducible in production. And once they are located through scrupulous monitoring, they are easier to identify and eliminate.

Operators develop a strong intuition about shifts in utilization patterns. The ability to discern anomalies from visual plots is a big part of their job knowledge. Sometimes operators must make decisions quickly, and in critical situations, knowing your system well can reduce blunders and improve your chances for successful mitigation. Other times, intuition leads to unfounded assumptions and acting on them may lead to catastrophic outcomes. Comprehensive monitoring helps you verify wild guesses and gut feelings.

Monitoring provides an immediate insight into a system’s current state. This data often takes quantitative form and, when recorded on timeseries, become a rich source of information for baselining.

Establishing standard performance levels is an important part of your job. It finds application in capacity planning, leads to formulation of data-backed Service-Level Agreements (SLAs) and, where inconsistencies are detected, can be a starting point for in-depth performance analysis.

In the context of monitoring, a prediction is a quantitative forecast containing a degree of uncertainty about future levels of resources or phenomena leading to their utilization. Monitoring traffic and usage patterns over time serves as a source of information for decision support. It can help you predict what normal traffic levels are during peaks and troughs, holidays, and key periods such as major global sporting events. When the usage patterns trend outside the projected limits, there probably is a good reason for it, even if this reason is not directly dependent on the system’s operation. For instance, traffic patterns that drop below 20% of the expected values for an extended period might stem from a portion of customers experiencing difficulties with their ISPs. Some Internet giants are able to conclusively narrow down the source of external failure and proactively help ISPs identify and mitigate against faults.

On top of predicting future workload, close interaction with monitoring may help predict business trends. Customers may have different needs at different times of the year. The ability to predict demands and then match them based on seasonality translates directly into revenue gains.

Metrics are a source of quantitative information, and the evaluation of an alarm state results in a boolean yes-no answer to the simple question: is the monitored value within expected limits? This has important implications for the automation of processes, especially those involving admission control, pause of operation, and estimations based on real-time data.

Bursts of input may saturate a system’s capacity and it may have to drop some traffic. In order to prevent uniformly bad experience for all users an attempt is made to reject a portion of inputs. This is commonly known as admission control and its objective is to defend against thrashing that severely denigrates performance.

Some implementations of admission control are known as the Big Red Button (BRB), as they require a human engineer to intervene and press it. Deciding when to stop admission is inherently inefficient: such decisions are usually made too late, they often require an approval or sign-off, and there is always the danger of someone forgetting to toggle the button back to the unpressed state when the situation is back under control.

Consider the potential of using inputs from monitoring for admission control. Monitoring-enabled mechanisms go into effect immediately when the problems are first detected, allowing for gradual and local degradation before sudden and global disasters. When the problem subsides, the protecting mechanism stops without the need for human supervision.

Monitoring’s feedback loop is also central to the idea of Autonomic Computing (AC), an architecture in which the system is capable of regulating itself and thus enabling self-management and self-healing. AC was inspired by the operation of the human central nervous system. It draws an analogy between it and a complex, distributed information system. Unconscious processes, such as the control over the rate of breath, do not require human effort. The goal of AC is to minimize the need for human intervention in a similar way, by replacing it with self-regulation. Comprehensive monitoring can provide an effective means to achieve this end.

Having discussed what these processes are for, let’s move on to how they’re done. Monitoring is a continuous process, a series of steps carried out in a loop. This section outlines its workings and introduces monitoring’s fundamental building blocks.

Watching and evaluating timeseries, chronologically ordered lists of data points, is at the core of both monitoring and alerting.

Monitoring consists of recording and analyzing quantitative inputs, that is, numeric measurements carrying information about current state and its most recent changes. Each data input comes with a number of properties describing it: the origin of the measurement and its attributes such as units and time at which sampling took place.

The inputs along with their properties are stored in the form of metrics. A metric is a data structure optimized for storage and retrieval of numeric inputs. The resulting collection of gathered inputs may be interpreted in many different ways based on the values of their assigned properties. Such interpretation allows a tool to evaluate the inputs as a whole as well as at many abstract levels, from coarse to fine granularity.

Data inputs extracted from selected metrics are further agglomerated into groups based on the time the measurement occurred. The groups are assigned to uniform intervals on a time axis, and the total of inputs in each group can be summarized by use of a mathematical transformation, referred to as a summary statistic. The mathematical transformation yields one numeric data point for each time interval. The collection of data points, a timeseries, describes some statistical aspect of all inputs from a given time range. The same set of data inputs may be used to generate different data points, depending on the selection of a summary statistic.

An alarm is a piece of configuration describing a system’s change in state, most typically a highly undesirable one, through fluctuations of data points in a timeseries. Alarms are made up of metric monitors and date-time evaluations and may optionally nest other alarms.

An alert is a notification of a potential problem, which can take one or more of the following forms: email, SMS, phone call, or a ticket. An alert is issued by an alarm when the system transitions through some threshold, and this threshold breach is detected by a monitor. Thus, for example, you may configure an alarm to alert you when the system exceeds 80% of CPU utilization for a continuous period of 10 minutes.

A metric monitor is attached to a timeseries and evaluates it against a threshold. The threshold consists of limits (expressed as the number of data points) and the duration of the breach. When the arriving data points fall below the threshold, exceed the threshold, or go outside the defined range for long enough, the threshold is said to be breached and the monitor transitions from clear into alert state. When the data points fall within the limits of the defined threshold, the monitor recovers and returns to clear state. Monitor states are used as factors in the evaluation of alarm states.

A monitoring system is a set of software components that performs measurements and collects, stores, and interprets the monitored data. The system is optimized for efficient storage and prompt retrieval of monitoring metrics for visual inspection of timeseries as well as data point analysis for the purposes of alerting.

Many vendors have taken up the challenge of designing and implementing monitoring systems. A great deal of open source products are available for use and increasingly more cloud vendors offer monitoring and alerting as a service. Listing them here makes little sense as the list is very dynamic. Instead, I’ll refer you to the Wikipedia article on comparing network monitoring systems, which does a superb job comparing about 60 monitoring systems against one another and classifying each in around 17 categories based on supported features, mode of operation, and licensing.

It’s good to ask the following questions when selecting a monitoring product:

What are the fees and restrictions imposed by product’s license?

Was the solution designed with reliability and resilience in mind? If not, how much effort will go into monitoring the monitoring platform itself?

Is it capable of juxtaposing timeseries from arbitrary metrics on the same plot as needed?

Does it produce timeseries plots of fine enough granularity?

Does its alerting platform empower experienced users to create sophisticated alarms?

Does it offer an API access that lets you export gathered data for offline analysis?

How difficult is it to scale it up as your system expands?

How easily will you be able to migrate from it to another monitoring or alerting solution?

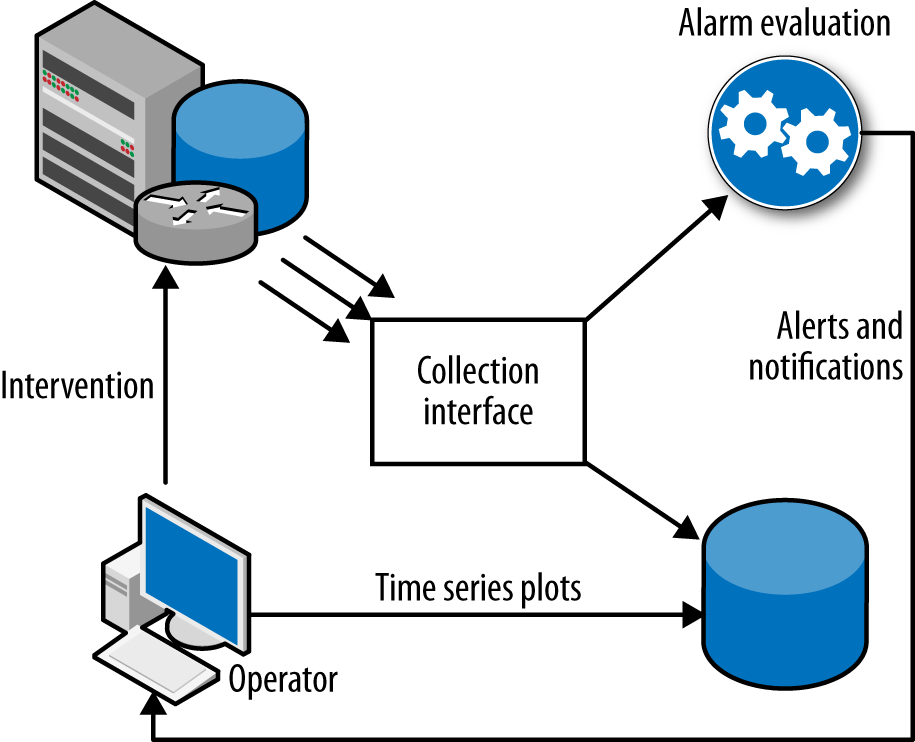

The vast majority of monitoring systems, including those listed in the article, share a similar high-level architecture and operate on very similar principles. Figure 1-1 illustrates the interactions between its components. The process starts with collection of input data. The agents gather and submit inputs to the monitoring system through its specialized write-only interface. The system stores data inputs in metrics and may submit fresh data points for evaluation of threshold breach conditions. When a threshold breach is detected, an alert may be sent to notify the operator about the fault. The operator analyzes timeseries plots and draws conclusions that lead to a mitigative action. Generally speaking, the process is broken down into three functional parts:

Data Collection

The data about system’s operations is collected by agents from servers, databases, and network equipment. The source of data are logs, device statistics, and system measurements. Collection agents group inputs into metrics and give them a set of properties that serve as an address in space and time. The inputs are later submitted to the monitoring system through an agreed-upon protocol and stored in the metrics database.

Data Aggregation and Storage

Incoming data inputs are grouped and collated by their properties and subsequently stored in their respective metrics. Data inputs are retrieved from metrics and summarized by a summary statistic to yield a timeseries. Resulting timeseries data points are submitted one by one to an alarm evaluation engine and are checked for occurrences of anomalous conditions. When such conditions are detected, an alarm goes off and dispatches an alert to the operator.

Presentation

The operator may generate timeseries plots of selected timeseries as a way of gaining an overview of the current state or in response to receiving an alert. When a fault is identified and an appropriate mitigative action is carried out, the graphs should give immediate feedback and reflect the degree to which the corrective action has helped. When no improvement is observed, further intervention may be necessary.

A monitoring system provides a point of reference for all operators. Its benefits are most pronounced in mature organizations where infrastructure teams, systems engineering, application developers and ops are enabled to interact freely, exchange observations and reassign responsibilities. Having a single point of reference for all teams significantly boosts the efficacy of detection and mitigation. Chapter 2 discusses monitoring in depth.

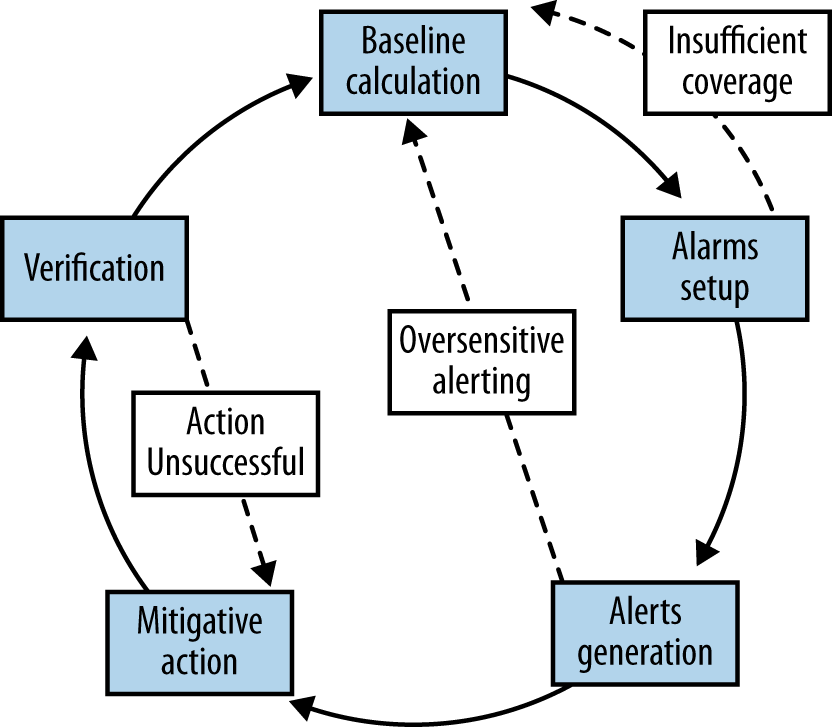

Human operators play a central role in system monitoring. The process starts with establishing the system’s baseline, that is, gathering information about the levels of performance and system behavior under normal conditions. This information serves as a starting point for the creation of an initial alerting configuration. The initial setup attempts to define abnormal conditions by defining thresholds for exceptional metric values.

Ideally, alarms should generate alerts only in response to actual defects that burden normal system operation. Unfortunately, that’s not always the case.

When the thresholds are set up too liberally, legitimate problems may not be detected in time and the system runs a greater risk of performance degradation, which in the end may lead to system downtime. When the problems are eventually discovered and mitigated, the alerting configuration ought to be tightened to prevent the recurrence of costly outages.

Alarm monitors can also be created with unnecessarily sensitive thresholds, leading to a high likelihood that an alarm will be triggered by normal system operation. In such scenarios, the alarms will generate alerts when no harm is done. Once again, the baseline should then be reevaluated and respective monitors adjusted to improve detectability of real issues.

Most alarms, however, do go off for a valid reason and identify faults that can be mitigated. When that happens, an operator investigates the problem, starting with the metric that triggered the threshold breach condition and reasoning backwards in his search for a cause. When a satisfactory explanation is found and mitigative measures are taken to put the system back in equilibrium, the metrics reflect that and the alarm transitions back into the clear state. If the metrics do not reveal any improvement, that raises questions about the effectiveness of the mitigation and an alternative action might need to be taken to combat the problem fully.

Once more, after a successful recovery, the behavior of system metrics might improve enough to warrant yet another baseline recalculation and subsequent adjustment of the alarm configuration (Figure 1-2).

An Issue Tracking System (ITS) is a database of reported problems recorded in the form of tickets. It facilitates prioritization and adequate tracking of reported problems as well as enabling the efficient collaboration between an arbitrary number of individuals and teams. Alerts often take the form of tickets, and therefore their role in prioritization and event response is very relevant to the process of alerting.

A ticket is a description of a problem with a chronological record of actions taken in attempt to resolve it.

Tickets are an extremely convenient mechanism for prioritizing incoming issues and enabling collaboration between multiple team members. They may be filed by humans or generated by automated processes, such as alarms attached to metric monitors. Either way, they are indispensable in helping to resolve problems and serve as a central point of reference for all parties involved in the resolution process. New information is appended to the ticket through updates. The most recent update reflects the latest state of the ticket. When a solution to the problem is found and applied, the ticket is archived and its state changes from “open” to “resolved.”

Every ticket comes with a title outlining symptoms of the reported problem, some more detailed description, and an assigned severity. Typically, the severity level falls into one of four or five possible categories: urgent, high, normal, low, and, optionally, trivial. Chapter 3 describes the process of selecting the right priority. Tickets also have a set of miscellaneous properties, such as information about the person making the request, as well as a set of timestamps recording creation and modification dates, which are all used in the process of reporting.

The operator dealing with tickets is expected to work on them in order of priority from most to least severe. To assist the operator, the tickets are placed in priority queues. Each ticket queue is a database query that returns a list of ticket entries sorted by a set of predefined criteria. Most commonly, the list is sorted by priority in descending order and, among priorities, by date from oldest to newest.

Depending on the structure and size of the organization, an ITS may host from one to many hundreds of ticket queues. Tickets are reassigned between queues to signal transfer of responsibility for issue resolution. A team may own a number of queues, each for a separate breed of tickets.

Tickets resolved over time are a spontaneously created body of knowledge, with valuable information about the system problems, the sources of the problems, solutions for mitigation, and the quality of work carried out by the operators in resolving the problems. Practical ticket mining techniques are described in Chapter 7.

It is commonly believed that for monitoring to be effective it has to take conscious, continuously applied effort. Monitoring is not a trivial process and there are many facets to it. Sometimes the priorities must be balanced. It is true that an ad hoc approach will often require more effort than necessary, but with good preparation monitoring can become effortless. Let’s look at some factors that make monitoring difficult.

- Baselining

The problem with baselines is not that they are hard to establish, but that they are volatile. There are few areas for which the sentence “nothing endures but change” is more valid than for information systems. Hardware gets faster, software has fewer bugs, the infrastructure becomes more reliable. Sometimes software architects trade off the use of one resource for another, other times they give up a feature to focus on the core functionality. The implication of all that on monitoring and alerting is that alarms very quickly become stale and meaningless, and their maintenance adds to the operational burden.

- Coverage

Full monitoring coverage should follow a system’s expansion and structural changes, but that’s not always the case. More commonly, the configurations are set up at the start and revisited only when it is absolutely necessary, or—worse yet—when the configurations are so out of date that real problems start getting noticed by end users. Maintaining full monitoring coverage, which is essential to detecting problems, is often neglected until it’s too late.

- Manageability

Large monitoring configurations include tens of thousands of metrics and thousands of alarms. Complex setups are not only expensive to maintain in terms of manual labor, but are also prone to human misinterpretation and oversight. Without a proper systematic approach and rich instrumentation, the configurations will keep becoming increasingly inconsistent and extremely hard to manage.

- Accuracy

Sometimes faults will remain undetected, whereas other times alarms will go off despite no immediate or eventual danger of noticeable impact. Reducing the incidence of both kinds of errors is a constant battle, often requiring decisions that might seem counterintuitive at the beginning. But this battle is far from being lost!

- Context

Monitoring’s main objective is to identify and pinpoint the source of problems in a timely manner. Time is too precious and there is not enough of it for in-depth analysis. In order for complex data to be presented efficiently, large sets of numbers must be reduced to single numeric values or classified into a finite number of buckets. As a consequence, the person observing plots must make accurate assumptions based on a thorough understanding of the underlying data, their method of collection, and their source. Where do the inputs come from? In what proportions? What is the distribution of the values? Where are the limits? Correct interpretation requires in-depth knowledge of the system, which monitoring itself does not provide.

- Human Nature

In striving for results, humans often see what they want rather than what’s actually there. All too often, important pieces of information get discarded as outliers or as having negligible impact. Operators get away with neglecting outliers most of the time, but on rare occasions, especially at a large scale, neglecting these information-rich outliers may result in a high visibility outage. In addition, humans are terrible intuitive statisticians. We are prone to setting round thresholds, with a particular fondness for powers of 10, and we easily lose the sense of proportion.

There is a fair amount of discrepancy in the use of monitoring vocabulary. Many organizations, especially those with long established culture, use specific monitoring terms interchangeably. I’d like to close this chapter with a short glossary of the most important terms used throughout the book. I hope it will help to avoid some of the confusion.

- Agent

A software process that continuously records data inputs and reports them to a monitoring system.

- Alarm

A piece of configuration describing an undesirable condition and alerts issued in response to it.

- Alert

A notification message informing about a change of state, typically signifying a potential problem.

- Alerting

The process of configuring alarms and alerts.

- Data Input

A numeric value with an accompanying set of properties gathered at the source of the measurement by a monitoring agent.

- Data Point

A numeric value summarizing one or multiple data inputs reported in a defined time interval. A series of data points makes up a timeseries.

- Metric

A collection of data inputs described by a set of properties. Timeseries are often mistakenly referred to as metrics. Monitoring metrics should not be confused with performance metrics either, which are a set of high level business performance indicators.

- Monitor

A process evaluating the most recent data points on a timeseries for threshold fit. This is an integral part of an alarm.

- Monitoring

The process of collecting and retrieving relevant data describing a change of state.

- Timeseries

A list of data points sorted in natural temporal order, most commonly presented on a plot.

Get Effective Monitoring and Alerting now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.