Chapter 4. Control Statements

Note

This chapter’s material is rich and intellectually challenging. Don’t give up if you start to feel lost (but do review it later to make sure you have absorbed it all). This chapter, together with the next, will complete our introduction to Python. To help you understand its contents, the chapter ends with some extended examples that reiterate the points made in its shorter examples. The rest of the book has a very different flavor.

Chapters 1 and 2 introduced simple statements:

Expressions, including function calls

Assignments

Augmented assignments

Various forms of import

Assertions

returnyield(to implement generators)pass

They also introduced the statements def, for defining functions, and with, to use with files.[20] These are compound statements because they require at least one indented statement after the

first line. This chapter introduces other compound statements. As with

def and with, the first line of every compound statement

must end with a colon and be followed by at least one statement indented

relative to it. Unlike def and with statements, though, the other compound

statements do not name anything. Rather, they determine the order in which

other statements are executed. That order is traditionally called the

control flow or flow of control,

and statements that affect it are called control statements.[21]

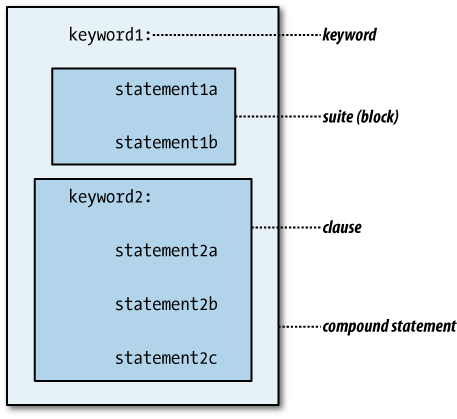

Some kinds of compound statements can or must have more than one clause. The first line of each clause of a compound statement—its header in Python terminology—is at the same level of indentation as the headers of the statement’s other clauses. Each header begins with a keyword and ends with a colon. The rest of the clause—its suite—is a series of statements indented one level more than its header.

Note

The term “suite” comes from Python’s technical documentation. We’ll

generally use the more common term block instead.

Also, when discussing compound statements, we frequently refer to clauses

by the keywords that introduce them (for example, “a with clause”).

Figure 4-1 illustrates

the structure of a multi-clause compound statement. Not all compound

statements are multi-clause, but every clause has a header and a suite

containing at least one statement (if only pass).

The statements discussed in this chapter are the scaffolding on which you will build your programs. Without them you are limited to the sort of simple computations shown in the examples of the previous chapters. The four kinds of compound statements introduced here are:

Conditionals

Loops

Iterations

Exception handlers

Note

Starting later in this chapter, some common usage patterns of Python functions, methods, and statements will be presented as abstract templates, along with examples that illustrate them. These templates are a device for demonstrating and summarizing how these constructs work while avoiding a lot of written description. They contain a mixture of Python names, self-descriptive “roles” to be replaced by real code, and occasionally some “pseudocode” that describes a part of the template in English rather than actual Python code.

The templates are in no way part of the Python language. In addition to introducing new programming constructs and techniques as you read, the templates are designed to serve as references while you work on later parts of the book and program in Python afterwards. Some of them are quite sophisticated, so it would be worth reviewing them periodically.

Conditionals

The most direct way to affect the flow of control is with a

conditional statement. Conditionals in Python are

compound statements beginning with if.

During the import of a module __name__ is bound to the name of the module, but

while the file is being executed __name__ is bound to '__main__'. This gives you a way to include

statements in your Python files that are executed only when the module is

run or, conversely, only when it is imported. The comparison of __name__ to '__main__' would almost always be done in a

conditional statement and placed at the end of the file.

A common use for this comparison is to run tests when the module is executed, but not when it is

imported for use by other code. Suppose you have a function called

do_tests that contains lots of

assignment and assertion statements that you don’t want to run when the

module is imported in normal use, but you do want to execute when the

module is executed. While informal, this is a useful technique for testing

modules you write. At the end of the file you would write:

if __name__ == '__main__':

do_tests()There are several forms of conditional statements. The next one

expresses a choice between two groups of statements and has two clauses,

an if and an else.

A simple use of the one-alternative form of conditional is to expand the test for whether a file is being imported as opposed to being executed. We can set it up so that one thing happens when the file is imported and a different thing happens when it’s executed.

This example shows only one statement in each block. There could be

others, but another reason to group statements into simple functions is so

you can invoke them “manually” in the interpreter during development and

testing. You might run do_tests, fix a

few things, then run it again. These test functions are useful whether

invoked automatically or manually:

if __name__ == '__main__':

do_tests()

else:

print(__name__, 'has been imported.')The third form of conditional statement contains more than one test.

Except for the if at the beginning, all

the test clauses are introduced by the keyword elif.

Python has a rich repertoire of mechanisms for controlling execution. Many kinds of maneuvers that would have been handled in older languages by conditionals—and could still be in Python—are better expressed using these other mechanisms. In particular, because they emphasize values rather than actions, conditional expressions or conditional comprehensions are often more appropriate than conditional statements.

Programming languages have many of the same properties as ordinary human languages. Criteria for clear writing are similar in both types of language. You want what you write to be:

Succinct

Clear

Accurate

Note

It’s important not to burden readers of your code (you included!) with too many details. People can pay attention to only a few things at once. Conditionals are a rather heavy-handed form of code that puts significant cognitive strain on the reader. With a little experience and experimentation, you should find that you don’t often need them. There will be examples of appropriate uses in the rest of this chapter, as well as in later ones. You should observe and absorb the style they suggest.

Loops

A loop is a block of statements that gets executed as long as some condition is

true. Loops are expressed in Python using while statements.

Note that the test may well be false the first time it is evaluated.

In that case, the statements of the block won’t get executed at all. If

you want some code to execute once the test is false, include an else clause in your loop.

There are two simple statements that are associated with both loops

and iterations (the subject of the next section): continue and break.

The continue statement is rarely used in Python programming, but it’s worth

mentioning it here in case you run across it while reading someone else’s

Python code. The break statement is

seen somewhat more often, but in most cases it is better to embed in the

loop’s test all the conditions that determine whether it should continue

rather than using break. Furthermore, in

many cases the loop is the last statement of a function, so you can just use a return statement to both end the loop and exit

the function. (A return exits the

function that contains it even if execution is in the middle of a

conditional or loop.) Using a return

instead of break is more convenient

when each function does just one thing: most uses of break are intended to move past the loop to

execute code that appears later in the function, and if there isn’t any

code later in the function a return

statement inside the loop is equivalent to a break.

An error that occurs during the execution of a loop’s test or one of its statements also terminates the execution of the loop. Altogether, then, there are three ways for a loop’s execution to end:

- Normally

The test evaluates to false.

- Abnormally

An error occurs in the evaluation of the test or body of the loop.

- Prematurely

The body of the loop executes a

returnorbreakstatement.

When you write a loop, you must make sure that the test expression

eventually becomes false or a break or

return is executed. Otherwise, the

program will get stuck in what is called an infinite loop. We’ll see at the end of

the chapter how to control what happens when errors occur, rather than

allowing them to cause the program to exit abnormally.

Simple Loop Examples

Example 4-1 presents the simplest possible loop, along with a function that reads a line typed by the user, prints it out, and returns it.

def echo(): """Echo the user's input until an empty line is entered""" while echo1(): pass def echo1(): """Prompt the user for a string, "echo" it, and return it""" line = input('Say something: ') print('You said', line) return line

The function echo1 reads a

line, prints it, and returns it. The function echo contains the simplest possible while statement. It calls a function

repeatedly, doing nothing (pass),

until the function returns something false. If the user just presses

Return, echo1 will print and return

an empty string. Since empty strings are false, when the while gets an empty string back from echo1 it stops. A slight variation, shown in

Example 4-2, is to compare the result returned from

echo1 to some specified value that

signals the end of the conversation.

def polite_echo(): """Echo the user's input until it equals 'bye'""" while echo1() != 'bye': pass

Of course, the bodies of loops are rarely so trivial. What allows

the loop in these examples to contain nothing but a pass is that echo1 is called both to perform an action and

to return True or False. This example uses such trivial loop

bodies only to illustrate the structure of the while statement.

Initialization of Loop Values

Example 4-3 shows a more typical loop. It records the user’s responses, and

when the user types 'bye' the

function returns a record of the input it received. The important thing

here is that it’s not enough to use echo1’s result as a test. The function also

needs to add it to a list it is building. That list is returned from the

function after the loop exits.

def recording_echo(): """Echo the user's input until it equals 'bye', then return a list of all the inputs received""" lst = [] entry = echo1() while entry != 'bye': lst.append(entry) entry = echo1() return lst

In this example, echo1 is

called in two places: once to get the first response and then each time

around the loop. Normally it is better not to repeat a piece of code in

two places, even if they are so close together. It’s easy to forget to

change one when you change the other or to make incompatible changes,

and changing the same thing in multiple places is tedious and

error-prone. Unfortunately, the kind of repetition shown in this example

is often difficult to avoid when combining input—whether from the user or from a file—with while loops.

Example 4-4 shows the same function as Example 4-3, but with comments added to emphasize the way the code uses a simple loop.

def recording_echo(): # initialize entry and lst lst = [] # get the first input entry = echo1() # test entry while entry != 'bye': # use entry lst.append(entry) # change entry entry = echo1() # repeat # return result return lst

All parts of this template are optional except for the line

beginning with while. Typically, one

or more of the values assigned in the initialization portion are used in

the loop test and changed inside the loop. In recording_echo the value of entry is initialized, tested, used, and

changed; lst is initialized, used,

and changed, but it is not part of the loop’s test.

Looping Forever

Sometimes you just want your code to repeat something until it executes a return statement. In that case there’s no need

to actually test a value. Since while

statements require a test, we use True, which is, of course, always true. This

may seem a bit odd, but there are times when something like this is

appropriate. It is often called “looping forever.” Of course, in reality

the program won’t run “forever,” but it might run forever as far as

it is concerned—that is, until something external

causes it to stop. Such programs are found frequently in operating system and server

software.

Example 4-5 shows a rewrite of Example 4-3 using the Loop Forever template. Typical loops usually get the next value at the end of the loop, but in this kind, the next value is obtained at the beginning of the loop.

def recording_echo_with_conditional(): """Echo the user's input until it equals 'bye', then return a list of all the inputs received""" seq = [] # no need to initialize a value to be tested since nothing is tested! while True: entry = echo1() if entry == 'bye': return seq seq.append(entry)

Loops over generators are always effectively “forever” in that

there’s no way to know how many items the generator will produce. The

program must call next over and over

again until the generator is exhausted. We saw in Chapter 3 (in Generators) that the

generator argument of next can be

followed by a value to return when the generator is exhausted. A

“forever” loop can be written to use this feature in a function that

combines all of the generated amino acid abbreviations into a string.

Example 4-6 repeats the

definition of the generator function and shows the definition of a new

function that uses it.

def aa_generator(rnaseq): """Return a generator object that produces an amino acid by translating the next three characters of rnaseq each time next is called on it""" return (translate_RNA_codon(rnaseq[n:n+3]) for n in range(0, len(rnaseq), 3)) def translate(rnaseq): """Translate rnaseq into amino acid symbols""" gen = aa_generator(rnaseq) seq = '' aa = next(gen, None) while aa: seq += aa aa = next(gen, None) return seq

Loops with Guard Conditions

Loops are often used to search for a value that meets the test condition when there is no guarantee that one does. In such situations it is not enough to just test each value—when there are no more values, the test would be repeated indefinitely. A second conditional expression must be added to detect that no more values remain to be tested.

Loops like these are used when there are two separate reasons for

them to end: either there are no more values to

use—at-end—or some kind of special value has

been encountered, detected by at-target. If

there are no more values to consider, evaluating

at-target would be meaningless, or, as is

often the case, would cause an error. The and operator is used to “protect” the second part of the test so that

it is evaluated only if the first is true. This is sometimes called

a guard condition.

When a loop can end for more than one reason, the statements after

the while will need to distinguish

the different cases. The simplest and most common case is to return one

value if the loop ended because at-end became

true and a different value if the loop ended because

at-target became true.

Two-condition loops like this occur frequently in code that reads from streams such as files, terminal input, network connections, and so on. That’s because the code cannot know when it has reached the end of the data until it tries to read past it. Before the result of a read can be used, the code must check that something was actually read.

Because readline returns

'\n' when it reads a blank line but

returns '' at the end of a file, it

is sufficient to check to see that it returned a nonempty string.

Repeated calls to readline at the end

of the file will continue to return empty strings, so if its return

value is not tested the loop will never terminate.

Example 4-7 shows a

function that reads the first sequence from a FASTA file. Each time it reads a line it must check first

that the line is not empty, indicating that the end of file has been

reached, and if not, that the line does not begin with '>', indicating the beginning of the next

sequence.

def read_sequence(filename): """Given the name of a FASTA file named filename, read and return its first sequence, ignoring the sequence's description""" seq = '' with open(filename) as file: line = file.readline() while line and line[0] == '>': line = file.readline() while line and line[0] != '>': # must check for end of file seq += line line = file.readline() return seq

Note

Although files can often be treated as collections of lines

using comprehensions or readlines,

in some situations it is more appropriate to loop using readline. This is especially true when

several related functions all read from the same stream.

The bare outline of code that loops over the lines of a file, doing something to each, is shown in the next template.

Iterations

Collections contain objects; that’s more or less all they

do. Some built-in functions—min, max, any, and

all—work for any type of collection.

The operators in and not in accept any type of collection as their

second operand. These functions and operators have something very

important in common: they are based on doing something with each element

of the collection.[22] Since any element of a collection could be its minimum or

maximum value, min and max must consider all the elements. The

operators in and not in and the functions any and all

can stop as soon as they find an element that meets a certain condition,

but if none do they too will end up considering every element of the

collection.

Doing something to each element of a collection is called iteration. We’ve actually already seen

a form of iteration—comprehensions. Comprehensions “do something” to every

element of a collection, collecting the results of that “something” into a

set, list, or dictionary. Comprehensions with multiple for clauses perform

nested iterations. Comprehensions with one or more if

clauses perform conditional iteration: unless an element passes all the

if tests, the “something” will not be

performed for that element and no result will be added.

The code in this book uses comprehensions much more aggressively

than many Python programmers do. You should get comfortable using them,

because in applicable situations they say just what they mean and say it

concisely. Their syntax emphasizes the actions and tests performed on the

elements. They produce collection objects, so the result of a

comprehension can be used in another expression, function call, return statement, etc. Comprehensions help

reduce the littering of code with assignment statements and the names they

bind.

The question, then, is: what kinds of collection manipulations do

not fit the mold of Python’s comprehensions? From the

point of view of Python’s language constructs, the answer is that actions

performed on each element of a collection sometimes must be expressed

using statements, and comprehensions allow only expressions.

Comprehensions also can’t stop before the end of the collection has been

reached, as when a target value has been located. For these and other

reasons Python provides the for

statement to perform general-purpose iteration over collections.

Iteration Statements

Iteration statements all begin with the keyword for. This section shows many ways for statements can be used and “templates”

that summarize the most important.

You will use for statements

often, since so much of your programming will use collections. The

for statement makes its purpose very

clear. It is easy to read and write and minimizes the opportunities for

making mistakes. Most importantly, it works for collections that aren’t

sequences, such as sets and dictionaries. As a matter of fact, the

for statement isn’t even restricted

to collections: it works with objects of a broader range of types that

together are categorized as iterables. (For

instance, we are treating file objects as collections (streams), but technically they are another

kind of iterable.)

Note

The continue and break statements introduced in the section

on loops work for iterations too.

By default, a dictionary iteration uses the dictionary’s keys. If

you want the iteration to use its values, call the values method

explicitly. To iterate with both keys and values at the same time, call

the items method. Typically, when

using both keys and values you would unpack the result of items and assign a

name to each, as shown at the end of the following template.

The previous chapter pointed out that if you need a dictionary’s

keys, values, or items as a list you can call list on the result of the corresponding

method. This isn’t necessary in for

statements—the results of the dictionary methods can be used

directly. keys, values, and items each return an iterable of a different

type—dict_keys, dict_values, and dict_items, respectively—but this difference

almost never matters, since the results of calls to these methods are

most frequently used in for

statements and as arguments to list.

Sometimes it is useful to generate a sequence of integers along

with the values over which a for

statement iterates. The function enumerate(iterable) generates tuples of the form (n, value), with n starting

at 0 and incremented with each value

taken from the iterable. It is rarely used anywhere but in a for statement.

A common use for enumerate is

to print out the elements of a collection along with a sequence of

corresponding numbers. The “do something” line of the template becomes a

call to print like the following:

print(n, value, sep='\t')

Kinds of Iterations

Most iterations conform to one of a small number of patterns. Templates for these patterns and examples of their use occupy most of the rest of this chapter. Many of these iteration patterns have correlates that use loops. For instance, Example 4-3 is just like the Collect template described shortly. In fact, anything an iteration can do can be done with loops. In Python programming, however, loops are used primarily to deal with external events and to process files by methods other than reading them line by line.

Note

Iteration should always be preferred over looping. Iterations are a clearer and more concise way to express computations that use the elements of collections, including streams. Writing an iteration is less error-prone than writing an equivalent loop, because there are more details to “get right” in coding a loop than in an iteration.

Do

Often, you just want to do something to every element of a collection. Sometimes that means calling a function and ignoring its results, and sometimes it means using the element in one or more statements (since statements don’t have results, there’s nothing to ignore).

A very useful function to have is one that prints every element

of a collection. When something you type into the interpreter returns

a long collection, the output is usually difficult to read.

Using pprint.pprint helps,

but for simple situations the solution demonstrated in Example 4-8 suffices. Both

pprint and this definition can be

used in other code too, of course.

def print_collection(collection): for item in collection: print(item) print()

Actually, even this action could be expressed as a comprehension:

[print(item) for item in collection]

Since print returns None, what you’d get

with that comprehension is a list containing one None value for each item in the collection.

It’s unlikely that you’d be printing a list large enough for it to

matter whether you constructed another one, but in another situation

you might call some other no-result function for a very large

collection. However, that would be silly and inefficient. What you

could do in that case is use a set, instead of list,

comprehension:

{print(item)for item in collection}That way the result would simply be {None}. Really, though, the use of a

comprehension instead of a Do iteration is not a serious suggestion,

just an illustration of the close connection between comprehensions

and iterations.

We can create a generalized “do” function by passing the “something” as a functional argument, as shown in Example 4-9.

The function argument could be a named function. For instance,

we could use do to redefine

print_collection from Example 4-8, as shown in

Example 4-10.

def print_collection(collection): do(collection, print)

This passes a named function as the argument to do. For more ad hoc uses we could pass a

lambda expression, as in Example 4-11.

The way to express a fixed number of repetitions of a block of code is to iterate over a range, as shown in the following template.

Collect

Iterations often collect the results of the “something” that gets done for each element. That means creating a new list, dictionary, or set for the purpose and adding the results to it as they are computed.

Most situations in which iteration would be used to collect results would be better expressed as comprehensions. Sometimes, though, it can be tricky to program around the limitation that comprehensions cannot contain statements. In these cases, a Collect iteration may be more straightforward. Perhaps the most common reason to use a Collect iteration in place of a comprehension or loop is when one or more names are assigned and used as part of the computation. Even in those cases, it’s usually better to extract that part of the function definition and make it a separate function, after which a call to that function can be used inside a comprehension instead of an iteration.

Example 4-12 shows a rewrite of the functions for reading entries from FASTA files in Chapter 3. In the earlier versions, all the entries were read from the file and then put through a number of transformations. This version, an example of the Collect iteration template, reads each item and performs all the necessary transformations on it before adding it to the collection. For convenience, this example also repeats the most succinct and complete comprehension-based definition.

While more succinct, and therefore usually more appropriate for most situations, the comprehension-based version creates several complete lists as it transforms the items. Thus, with a very large FASTA file the comprehension-based version might take a lot more time or memory to execute. After the comprehension-based version is yet another, this one using a loop instead of an iteration. You can see that it is essentially the same, except that it has extra code to read the lines and check for the end of the file.

def read_FASTA_iteration(filename): sequences = [] descr = None with open(filename) as file: for line in file: if line[0] == '>': if descr: # have we found one yet? sequences.append((descr, seq)) descr = line[1:-1].split('|') seq = '' # start a new sequence else: seq += line[:-1] sequences.append((descr, seq)) # add the last one found return sequences def read_FASTA(filename): with open(filename) as file: return [(part[0].split('|'), part[2].replace('\n', '')) for part in [entry.partition('\n') for entry in file.read().split('>')[1:]]] def read_FASTA_loop(filename): sequences = [] descr = None with open(filename) as file: line = file.readline()[:-1] # always trim newline while line: if line[0] == '>': if descr: # any sequence found yet? sequences.append((descr, seq)) descr = line[1:].split('|') seq = '' # start a new sequence else: seq += line line = file.readline()[:-1] sequences.append((descr, seq)) # easy to forget! return sequences

Combine

Sometimes we want to perform an operation on all of the elements of a collection to

yield a single value. An important feature of this kind of iteration

is that it must begin with an initial value. Python has a

built-in sum function but no

built-in product; Example 4-13 defines one.

def product(coll): """Return the product of the elements of coll converted to floats, including elements that are string representations of numbers; if coll has an element that is a string but doesn't represent a number, an error will occur""" result = 1.0 # initialize for elt in coll: result *= float(elt) # combine element with return result # accumulated result

As simple as this definition is, there is no reasonable way to define it just using a comprehension. A comprehension always creates a collection—a set, list, or dictionary—and what is needed here is a single value. This is called a Combine (or, more technically, a “Reduce”[23]) because it starts with a collection and ends up with a single value.

For another example, let’s find the longest sequence in a

FASTA file. We’ll assume we have a function called

read_FASTA, like one of the

implementations shown in Chapter 3. Example 4-13 used a binary operation to

combine each element with the previous result. Example 4-14 uses a

two-valued function instead, but the idea is the same. The inclusion

of an assignment statement inside the loop is an indication that the

code is doing something that cannot be done with a

comprehension.

def longest_sequence(filename): longest_seq = '' for info, seq in read_FASTA(filename): longest_seq = max(longest_seq, seq, key=len) return longest_seq

A special highly reduced form of Combine is Count, where all the iteration does is count the number of elements. It would be used to count the elements in an iterable that doesn’t support length. This template applies particularly to generators: for a generator that produces a large number of items, this is far more efficient than converting it to a list and then getting the length of the list.

One of the most important and frequently occurring kinds of actions on iterables that cannot be expressed as a comprehension is one in which the result of doing something to each element is itself a collection (a list, usually), and the final result is a combination of those results. An ordinary Combine operation “reduces” a collection to a value; a Collection Combine reduces a collection of collections to a single collection. (In the template presented here the reduction is done step by step, but it could also be done by first assembling the entire collection of collections and then reducing them to a single collection.)

Example 4-15 shows an example in which “GenInfo” IDs are extracted from each of several files, and a single list of all the IDs found is returned.

def extract_gi_id(description): """Given a FASTA file description line, return its GenInfo ID if it has one""" if line[0] != '>': return None fields = description[1:].split('|') if 'gi' not in fields: return None return fields[1 + fields.index('gi')] def get_gi_ids(filename): """Return a list of the GenInfo IDs of all sequences found in the file named filename""" with open(filename) as file: return [extract_gi_id(line) for line in file if line[0] == '>'] def get_gi_ids_from_files(filenames): """Return a list of the GenInfo IDs of all sequences found in the files whose names are contained in the collection filenames""" idlst = [] for filename in filenames: idlst += get_gi_ids(filename) return idlst

Search

Another common use of iterations is to search for an element that passes some

kind of test. This is not the same as a combine iteration—the result

of a combination is a property of all the elements of the collection,

whereas a search iteration is much like a search loop. Searching takes

many forms, not all of them iterations, but the one thing you’ll just

about always see is a return statement

that exits the function as soon as a matching element has been found.

If the end of the function is reached without finding a matching

element the function can end without explicitly returning a value,

since it returns None by default.

Suppose we have an enormous FASTA file and we need to extract from it a sequence with a specific GenBank ID. We don’t want to read every sequence from the file, because that could take much more time and memory than necessary. Instead, we want to read one entry at a time until we locate the target. This is a typical search. It’s also something that comprehensions can’t do: since they can’t incorporate statements, there’s no straightforward way for them to stop the iteration early.

As usual, we’ll build this out of several small functions. We’ll define four functions. The first is the “top-level” function; it calls the second, and the second calls the third and fourth. Here’s an outline showing the functions called by the top-level function:

search_FASTA_file_by_gi_id(id, filename)

FASTA_search_by_gi_id(id, fil)

extract_gi_id(line)

read_FASTA_sequence(fil)This opens the file and calls FASTA_search_by_gi_id to do the real work.

That function searches through the lines of the file looking for those

beginning with a '>'. Each time

it finds one it calls get_gi_id to

get the GenInfo ID from the line, if there is one. Then it compares

the extracted ID to the one it is looking for. If there’s a match, it

calls read_FASTA_sequence and

returns. If not, it continues looking for the next FASTA description

line. In turn, read_FASTA_sequence

reads and joins lines until it runs across a description line, at

which point it returns its result. Example 4-16 shows the

definition of the top-level function.

“Top-level” functions should almost always be very simple. They are entry points into the capabilities the other function definitions provide. Essentially, what they do is prepare the information received through their parameters for handling by the functions that do the actual work.

def search_FASTA_file_by_gi_id(id, filename): """Return the sequence with the GenInfo ID ID from the FASTA file named filename, reading one entry at a time until it is found""" id = str(id) # user might call with a number with open(filename) as file: return FASTA_search_by_gi_id(id, file)

Each of the other functions can be implemented in two ways. Both

FASTA_search_by_gi_id and read_FASTA_sequence can be implemented using

a loop or iteration. The simple function get_gi_id can be implemented with a

conditional expression or a conditional statement. Table 4-1 shows both

implementations for FASTA_search_by_gi_id.

The iterative implementation of FASTA_search_by_gi_id treats the file as a

collection of lines. It tests each line to see if it is the one that

contains the ID that is its target. When it finds the line it’s

seeking, it does something slightly different than what the template

suggests: instead of returning the line—the item found—it goes ahead

and reads the sequence that follows it.

Note

The templates in this book are not meant to restrict your code to specific forms: they are frameworks for you to build on, and you can vary the details as appropriate.

The next function—read_FASTA_sequence—shows another variation

of the search template. It too iterates over lines in the file—though

not all of them, since it is called after FASTA_search_by_gi_id has already read many

lines. Another way it varies from the template is that it accumulates

a string while looking for a line that begins with a '>'. When it finds one, it returns the

accumulated string. Its definition is shown in Table 4-2, and the

definition of get_gi_id is shown in

Table 4-3.

A special case of search iteration is where the result returned

is interpreted as a Boolean rather than the item found. Some search

iterations return False when a

match is found, while others return True. The exact form of the function

definition depends on which of those cases it implements. If finding a

match to the search criteria means the function should return False, then the last statement of the

function would have to return True

to show that all the items had been processed without finding a match.

On the other hand, if the function is meant to return True when it finds a match it is usually not

necessary to have a return at the

end, since None will be returned by

default, and None is interpreted as

false in logical expressions. (Occasionally, however, you really need

the function to return a Boolean value, in which case you would end

the function by returning False.)

Here are two functions that demonstrate the difference:

def rna_sequence_is_valid(seq): for base in seq: if base not in 'UCAGucag': return False return True def dna_sequence_contains_N(seq): for base in seq: if base == 'N': return True

Filter

Filtering is similar to searching, but instead of returning a result the first

time a match is found, it does something with each element for which

the match was successful. Filtering doesn’t stand on its own—it’s a

modification to one of the other kinds of iterations. This section

presents templates for some filter iterations. Each just adds a

conditional to one of the other templates. The condition is shown

simply as test item, but in practice that test could be

complex. There might even be a few initialization statements before

the conditional.

An obvious example of a Filtered Do is printing the header lines from a FASTA file. Example 4-17 shows how this would be implemented.

def print_FASTA_headers(filename): with open(filename) as file: for line in file: if line[0] == '>': print(line[1:-1])

As with Collect iterations in general, simple situations can be handled with comprehensions, while iterations can handle the more complex situations in which statements are all but unavoidable. For example, extracting and manipulating items from a file can often be handled by comprehensions, but if the number of items is large, each manipulation will create an unnecessarily large collection. Rather than collecting all the items and performing a sequence of operations on that collection, we can turn this inside out, performing the operations on one item and collecting only the result.

In many cases, once a line passes the test the function should

not return immediately. Instead, it should continue to read lines,

concatenating or collecting them, until the next time the test is

true. An example would be with FASTA-formatted files, where a function

might look for all sequence descriptions that contain a certain

string, then read all the lines of the sequences that follow them.

What’s tricky about this is that the test applies only to the lines

beginning with '>'. The lines of

a sequence do not provide any information to indicate whether they

should be included or not.

Really what we have here are two tests: there’s a preliminary

test that determines whether the primary test should be performed.

Neither applies to the lines that follow a description line in the

FASTA file, though. To solve this problem, we add a flag to govern the

iteration and set it by performing the primary test whenever the

preliminary test is true. Example 4-18 shows a function

that returns the sequence strings for all sequences whose descriptions

contain the argument string.

def extract_matching_sequences(filename, string): """From a FASTA file named filename, extract all sequences whose descriptions contain string""" sequences = [] seq = '' with open(filename) as file: for line in file: if line[0] == '>': if seq: # not first time through sequences.append(seq) seq = '' # next sequence detected includeflag = string in line # flag for later iterations else: if includeflag: seq += line[:-1] if seq: # last sequence in file is included sequences.append(seq) return sequences

The generalization of this code is shown in the following template.

A Filtered Combine is just like a regular Combine, except only elements that pass the test are used in the combining expression.

Example 4-13 showed a definition

for product. Suppose the collection

passed to product contained nonnumerical

elements. You might want the product function to skip nonnumerical values

instead of converting string representations of numbers to numbers.[24]

All that’s needed to skip nonnumerical values is a test that

checks whether the element is an integer or float and ignores it if it

is not. The function isinstance was

described briefly in Chapter 1; we’ll use that

here to check for numbers. Example 4-19 shows this new

definition for product.

def is_number(value): """Return True if value is an int or a float""" return isinstance(elt, int) or isinstance(elt, float) def product(coll): """Return the product of the numeric elements of coll""" result = 1.0 # initialize for elt in coll: if is_number(elt): result = result * float(elt) # combine element with accumulated result return result

What we’ve done here is replace the template’s

test with a call to is_number to perform the test. Suppose we

needed different tests at different times while computing the

product—we might want to ignore zeros or negative numbers, or we might

want to start at a different initial value (e.g., 1 if computing the product of only

integers). We might even have different actions to perform each time

around the iteration. We can implement many of these templates as

function definitions whose details are specified by parameters. Example 4-20 shows a completely general

combine function.

def combine(coll, initval, action, filter=None): """Starting at initval, perform action on each element of coll, finally returning the result. If filter is not None, only include elements for which filter(element) is true. action is a function of two arguments--the interim result and the element--which returns a new interim result.""" result = initval for elt in coll: if not filter or filter(elt): result = action(result, elt) return result

To add all the integers in a collection, we just have to call

combine with the right arguments:

combine(coll

0,

lambda result, elt: result + elt,

lambda elt: isinstance(elt, int)

)Nested iterations

One iteration often uses another. Example 4-21 shows a simple case—listing all the sequence IDs in files whose names are in a collection.

def list_sequences_in_files(filelist): """For each file whose name is contained in filelist, list the description of each sequence it contains""" for filename in filelist: print(filename) with open(filename) as file: for line in file: if line[0] == '>': print('\t', line[1:-1])

Nesting is not a question of physical containment of one piece

of code inside another. Following the earlier recommendation to write

short, single-purpose functions, Example 4-22 divides the previous

function, placing one iteration in each. This is still a nested

iteration, because the first function calls the second each time

around the for, and the second has

its own for statement.

def list_sequences_in_files(filelist): """For each file whose name is contained in filelist, list the description of each sequence it contains""" for filename in filelist: print(filename) with open(filename) as file: list_sequences_in_file(file) def list_sequences_in_file(file) for line in file: if line[0] == '>': print('\t', line[1:-1])

These examples do present nested iterations, but they don’t show what’s special about this kind of code. Many functions that iterate call other functions that also iterate. They in turn might call still other functions that iterate. Nested iterations are more significant when their “do something” parts involve doing something with a value from the outer iteration and a value from the inner iteration together.

Perhaps a batch of samples is to be submitted for sequencing with each of a set of primers:

for seq in sequences: for primer in primers: submit(seq, primer)

This submits a sequence and a primer for every combination of a

sequence from sequences and a primer from primers. In this case it doesn’t matter

which iteration is the outer and which is the inner, although if they

were switched the sequence/primer pairs would be submitted in a

different order.

Three-level iterations are occasionally useful—especially in bioinformatics programming, because codons consist of three bases. Example 4-23 shows a concise three-level iteration that prints out a simple form of the DNA codon table.

def print_codon_table():

"""Print the DNA codon table in a nice, but simple, arrangement"""

for base1 in DNA_bases: # horizontal section (or "group")

for base3 in DNA_bases: # line (or "row")

for base2 in DNA_bases: # vertical section (or "column")

# the base2 loop is inside the base3 loop!

print(base1+base2+base3,

translate_DNA_codon(base1+base2+base3),

end=' ')

print()

print()

>>> print_codon_table()

TTT Phe TCT Ser TAT Tyr TGT Cys

TTC Phe TCC Ser TAC Tyr TGC Cys

TTA Leu TCA Ser TAA --- TGA ---

TTG Leu TCG Ser TAG --- TGG Trp

CTT Leu CCT Pro CAT His CGT Arg

CTC Leu CCC Pro CAC His CGC Arg

CTA Leu CCA Pro CAA Gln CGA Arg

CTG Leu CCG Pro CAG Gln CGG Arg

ATT Ile ACT Thr AAT Asn AGT Ser

ATC Ile ACC Thr AAC Asn AGC Ser

ATA Ile ACA Thr AAA Lys AGA Arg

ATG Met ACG Thr AAG Lys AGG Arg

GTT Val GCT Ala GAT Asp GGT Gly

GTC Val GCC Ala GAC Asp GGC Gly

GTA Val GCA Ala GAA Glu GGA Gly

GTG Val GCG Ala GAG Glu GGG GlyRecursive iterations

Trees are an important class of data structure in computation: they provide the generality needed to represent branching information. Taxonomies and filesystems are good examples. A filesystem starts at the top-level directory of, say, a hard drive. That directory contains files and other directories, and those directories in turn contain files and other directories. The whole structure consists of just directories and files.

A data structure that can contain other instances of itself is said to be recursive. The study of recursive data structures and algorithms to process them is a major subject in computer science. Trees are the basis of some important algorithms in bioinformatics too, especially in the areas of searching and indexing.

While we won’t be considering such algorithms in this book, it

is important to know some rudimentary techniques for tree

representation and iteration. A simple while or for statement can’t by itself follow all the

branches of a tree. When it follows one branch, it may encounter

further branches, and at each juncture it can follow only one at a

time. It can only move on to the next branch after it’s fully explored

everything on the first one. In the meantime, it needs someplace to

record a collection of the remaining branches to be processed.

Each branch is just another tree. A function that processes a tree can call itself to process each of the tree’s branches. What stops this from continuing forever is that eventually subtrees are reached that have no branches; these are called leaves. A function that calls itself—or calls another function that eventually calls it—is called a recursive function.

Discussions of recursion are part of many programming texts and courses. It often appears mysterious until the idea becomes familiar, which can take some time and practice. One of the advantages of recursive functions is that they can express computations more concisely than other approaches, even when recursion isn’t actually necessary. Sometimes the code is so simple you can hardly figure out how it does its magic!

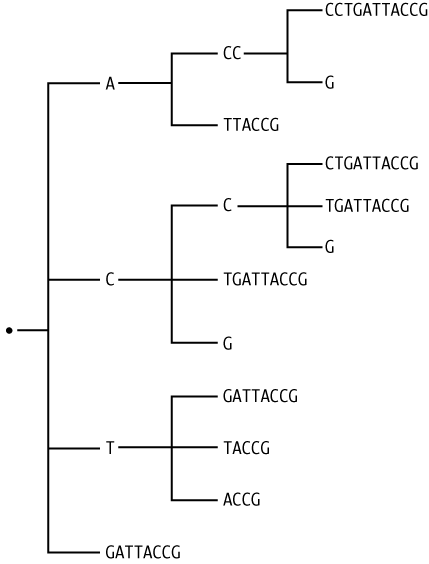

First, we’ll look at an example of one of the ways trees are used in bioinformatics. Some very powerful algorithms used in indexing and searching genomic sequences rely on what are called suffix trees. These are tree structures constructed so that every path from the root to a leaf produces a subsequence that is not the prefix of any other subsequence similarly obtained. The entire string from which the tree was constructed can be recovered by traversing all paths to leaf nodes, concatenating the strings encountered along the way, and collecting the strings obtained from each path. The longest string in the resulting collection is the original string. Figure 4-2 shows an example.

Algorithms have been developed for constructing and navigating such trees that do their work in an amount of time that is directly proportional to the length of the sequence. Normally algorithms dealing with tree-structured data require time proportional to N2 or at best N log N, where N is the length of the sequence. As N gets as large as is often required for genomic sequence searches, those quantities grow impossibly large. From this point of view the performance of suffix tree algorithms borders on the miraculous.

Our example will represent suffix trees as lists of lists of lists of... lists. The first element of each list will always be a string, and each of the rest of the elements is another list. The top level of the tree starts with an empty string. Example 4-24 shows an example hand-formatted to reflect the nested relationships.

['',

['A',

['CC',

['CCTGATTACCG'],

['G']

],

['TTACCG']

],

['C',

['C',

['CTGATTACCG'],

['TGATTACCG'],

['G']

],

['TGATTACCG'],

['G']

],

['T',

['GATTACCG'],

['TACCG'],

['ACCG']

],

['GATTACCG']

]Let’s assign tree1 to this

list and see what Python does with it. Example 4-25 shows an

ordinary interpreter printout of the nested lists.

['', ['A', ['CC', ['CCTGATTACCG'], ['G']], ['TTACCG']], ['C', ['C', ['CTGATTACCG'], ['TGATTACCG'], ['G']], ['TGATTACCG'], ['G']], ['T', ['GATTACCG'], ['TACCG'], ['ACCG']], ['GATTACCG']]

That output was one line, wrapped. Not very helpful. How much of

an improvement does pprint.pprint

offer?

>>> pprint.pprint(tree1)

['',

['A', ['CC', ['CCTGATTACCG'], ['G']], ['TTACCG']],

['C', ['C', ['CTGATTACCG'], ['TGATTACCG'], ['G']], ['TGATTACCG'], ['G']],

['T', ['GATTACCG'], ['TACCG'], ['ACCG']],

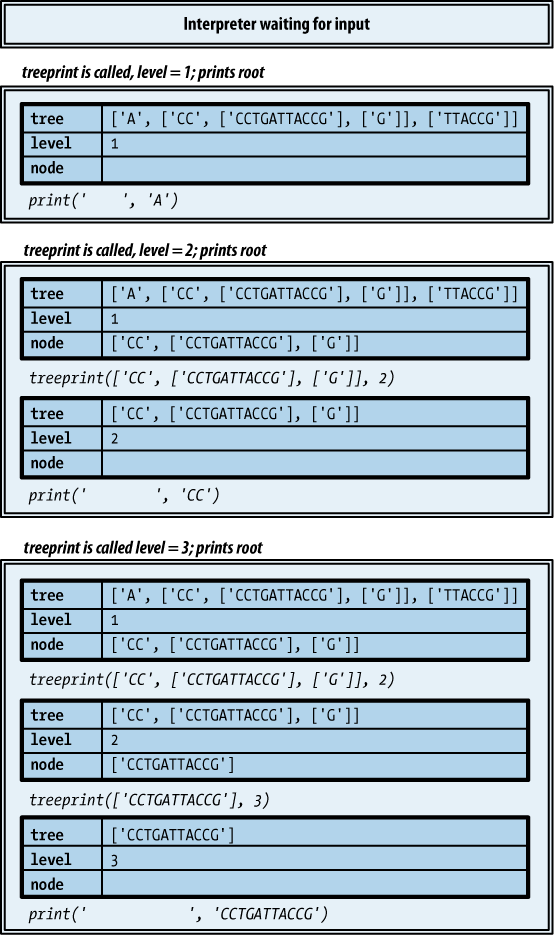

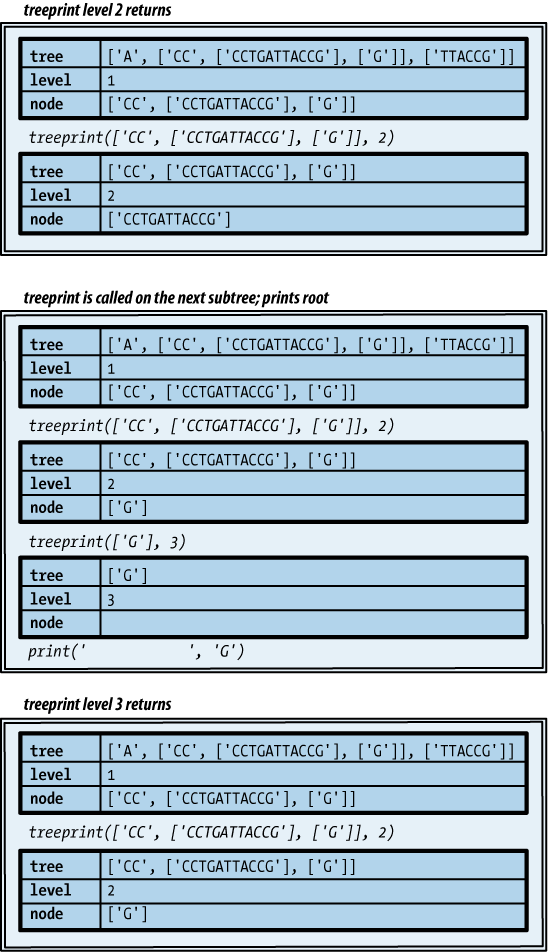

['GATTACCG']]This is a little better, since we can at least see the top-level structure. But what we want is output that approximates the tree shown in Figure 4-2. (We won’t go so far as to print symbols for lines and corners—we’re just looking to reflect the overall shape of the tree represented by the data structure.) Here’s the template for a recursive function to process a tree represented as just described here. (The information the tree contains could be anything, not just strings: whatever value is placed in the first position of the list representing a subtree is the value of that subtree’s root node.)

Do you find it difficult to believe that so simple a template can process a tree? Example 4-26 shows how it would be used to print our tree.

def treeprint(tree, level=0): print(' ' * 4 * level, tree[0], sep='') for node in tree[1:]: treeprint(node, level+1)

This produces the following output for the example tree. It’s not as nice as the diagram; not only are there no lines, but the root of each subtree is on a line before its subtrees, rather than centered among them. Still, it’s not bad for four lines of code!

A

CC

CCTGATTACCG

G

TTACCG

C

C

CTGATTACCG

TGATTACCG

G

TGATTACCG

G

T

GATTACCG

TACCG

ACCG

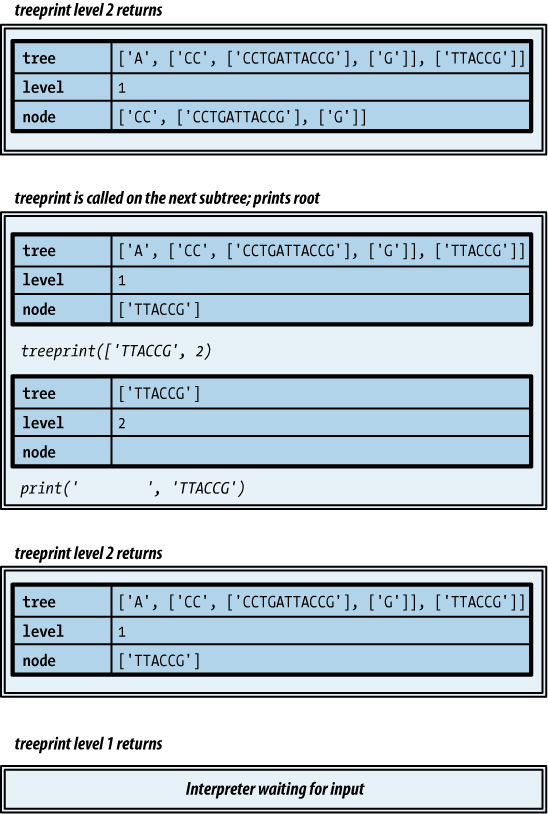

GATTACCGFigures 4-3, 4-4, and 4-5 illustrate the process that ensues

as the function in Example 4-26 does its work

with the list representing the subtree rooted at A.

Exception Handlers

Let’s return to Example 4-15, from our discussion of collection iteration. We’ll add a top-level function to drive the others and put all of the functions in one Python file called get_gi_ids.py. The contents of the file are shown in Example 4-27.

def extract_gi_id(description): """Given a FASTA file description line, return its GenInfo ID if it has one""" if line[0] != '>': return None fields = description[1:].split('|') if 'gi' not in fields: return None return fields[1 + fields.index('gi')] def get_gi_ids(filename): """Return a list of GenInfo IDs from the sequences in the FASTA file named filename""" with open(filename) as file: return [extract_gi_id(line) for line in file if line[0] == '>'] def get_gi_ids_from_files(filenames): """Return a list of GenInfo IDs from the sequences in the FASTA files whose names are in the collection filenames""" idlst = [] for filename in filenames: idlst += get_gi_ids(filename) return idlst def get_gi_ids_from_user_files(): response = input("Enter FASTA file names, separated by spaces: ") lst = get_gi_ids_from_files(response.split()) # assuming no spaces in file names lst.sort() print(lst) get_gi_ids_from_user_files()

We run the program from the command line, enter a few filenames, and get the results shown in Example 4-28.

%python get_gi_ids.pyEnter a list of FASTA filenames:aa1.fasta aa2.fasta aa3.fastaTraceback (most recent call last): File "get_gi_ids.py", line 27, in <module> get_gi_ids_from_user_files File "get_gi_ids.py", line 23, in get_gi_ids_from_user_files lst = get_gi_ids_from_files(files)) File "get_gi_ids.py", line 18, in get_gi_ids_from_files idlst += get_gi_ids(filename) File "get_gi_ids.py", line 10, in get_gi_ids with open(filename) as file: File "/usr/local/lib/python3.1/io.py", line 278, in __new__ return open(*args, **kwargs) File "/usr/local/lib/python3.1/io.py", line 222, in open closefd) File "/usr/local/lib/python3.1/io.py", line 619, in __init__ _fileio._FileIO.__init__(self, name, mode, closefd) IOError: [Errno 2] No such file or directory: 'aa2.fasta'

Python Errors

If you’ve executed any Python code you have written, you have probably already seen output like that in the previous example splattered across your interpreter or shell window. Now it’s time for a serious look at what this output signifies. It’s important to understand more than just the message on the final line and perhaps a recognizable filename and line number or two.

Tracebacks

As its first line indicates, the preceding output shows details of

pending functions. This display of information is called a traceback. There are two lines for

each entry. The first shows the name of the function that was called,

the path to the file in which it was defined, and the line number

where its definition begins, though not in that order. (As in this

case, you will often see <module> given as the module name on

the first line; this indicates that the function was called from the

top level of the file being run by Python or directly from the

interpreter.) The second line of each entry shows the text of the line

identified by the filename and line number of the first line, to save

you the trouble of going to the file to read it.

Note

Some of this will make sense to you now. Some of it won’t until you have more Python knowledge and experience. As the calls descend deeper into Python’s implementation, some technical details are revealed that we haven’t yet explored. It’s important that you resist the temptation to dismiss tracebacks as hopelessly complicated and useless. Even if you don’t understand all the details, tracebacks tell you very clearly what has happened, where, and, to some extent, why.

The problem causing the traceback in this example is clear

enough: the user included the file aa2.fasta in the list of files to be

processed, but when get_gi_id went

to open that file it couldn’t find it. As a result, Python reported an

IOError and stopped executing. It

didn’t even print the IDs that it had already found—it just

stopped.

Runtime errors

Is this what you want your program to do? You can’t prevent the user

from typing the name of a nonexistent file. While you could check that

each file exists before trying to open it (using methods from the

os module that we’ll be looking in

Chapter 6), this is only one of the many things

that could go wrong during the execution of your program. Maybe the

file exists but you don’t have read privileges for it, or it exists

but is empty, and you didn’t write your code to correctly handle that

case. Maybe the program encounters an empty line at the end of

the file and tries to extract pieces from it. Maybe the program tries

to compare incompatible values in an expression such as 4 < '5'.

By now you’ve probably encountered ValueError, TypeError, IndexError, IOError, and perhaps a few others. Each of

these errors is actually a type. Table 4-4 shows examples of common errors,

the type of error instance that gets created when they occur, and

examples of the messages that get printed.

Example | Error class | Message |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| |

|

| |

|

|

|

|

| |

[a] The second argument of [b] [c] Typing Ctrl-D on an empty line (Ctrl-Z on Windows)

ends input. Remember, though, that [d] Pressing Ctrl-C twice stops whatever Python is doing and returns to the interpreter. | ||

Even if get_gi_ids was

written to detect nonexistent files before trying to open them, what

should it do if it detects one? Should it just return None? Should it print its own error message

before returning None? If it

returns None, how can the function

that called it know whether that was because the file didn’t exist,

couldn’t be read, wasn’t a FASTA-formatted file, or just didn’t have

any sequences with IDs? If each function has to report to its caller

all the different problems it might have encountered, each caller will

have to execute a series of conditionals checking each of those

conditions before continuing with its own executions.

To manage this problem, languages provide exception handling mechanisms. These make it possible to ignore exceptions when writing most function definitions, while specifically designating, in relatively few places, what should happen when exceptions do occur. The term “exception” is used instead of “error” because if the program is prepared to handle a situation, it isn’t really an error when it arises. It becomes an error—an unhandled exception—if the program does not detect the situation. In that case, execution stops and Python prints a traceback with a message identifying the type of error and details about the problem encountered.

Exception Handling Statements

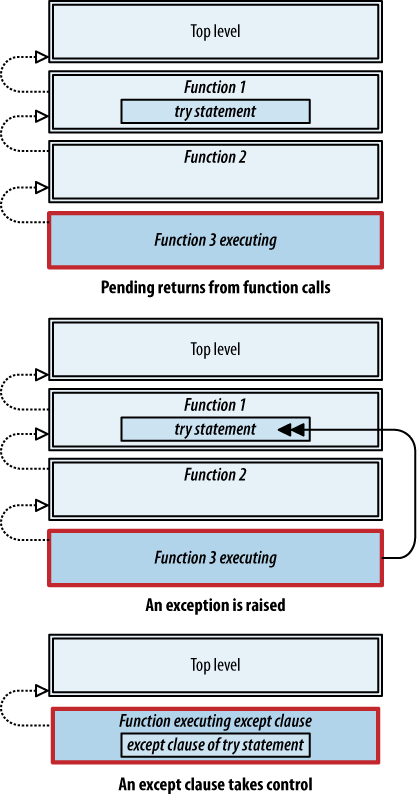

Python’s exception handling mechanism is implemented through the try statement. This looks and works much like

a conditional, except that the conditions are not tests you write, but

rather names of error classes.

The error class is one of the error names you’ll see printed out

on the last line of a traceback: IOError, ValueError, and so on. When a try statement begins, it starts executing the statements in the

try-statements block. If they complete

without any errors, the rest of the try statement is skipped and execution

continues at the next statement.

However, if an error of the type identified in the except clause occurs

during the execution of the try

block, something quite different happens: the call stack is “unwound” by

removing the calls to the functions “below” the one that contains the

try statement. Any of the

try-statements that haven’t yet executed are

abandoned. Execution continues with the statements in the except clause, and then moves on to the

statement that follows the entire try/except statement. Figures 4-6

and 4-7 show the difference.

Optional features of exception handling statements

The try statement offers

quite a few options. The difficulty here is not so much in

comprehending all the details, although that does take some time. The

real challenge is to develop a concrete picture of how control “flows”

through function calls and Python’s various kinds of statements. Then

you can begin developing an understanding of the very different flow

induced by exceptions.

Now that we know how to handle errors, what changes might we

want to make in our little program for finding IDs? Suppose we’ve

decided we want the program to print an error message whenever it

fails to open a file, but then continue with the next one. This is

easily accomplished with one simple try statement:

def get_gi_ids(filename): try: with open(filename) as file: return [extract_gi_id(line) for line in file if line[0] == '>'] except IOError: print('File', filename, 'not found or not readable.') return []

Note that the except clause

returns an empty list rather than returning None or allowing the function to end without

a return (which amounts to the same

thing). This is because the function that calls this one will be

concatenating the result with a list it is accumulating, and since

None isn’t a sequence it can’t be

added to one. (That’s another TypeError you’ll often see, usually as

a result of forgetting to return a value from a function.) If you’ve

named the exception with as

name, you can print(name) instead of or in addition to your own message.

Incidentally, this with

statement:

with open('filename') as file:usefile

is roughly the same as:

try: file = open('filename')usefile finally: file.close()

The finally clause guarantees

that the file will be closed whether an error happens or not—and

without the programmer having to remember to close it. This is a great

convenience that avoids several kinds of common problems. The with statement requires only one line in

addition to the statements to be executed, rather than the four lines

required by the try version.

Exception handling and generator objects

An important special use of try

statements is with generator objects. Each call to next with a generator object as its argument

produces the generator’s next value. When there are no more values,

next returns the value of its

optional second argument, if one is provided. If not, a StopIteration error is raised.

There are two ways to use next: either you can provide a default value

and compare it to the value next

returns each time, or you can omit the argument and put the call to

next inside a try that has an except StopIteration clause. (An except clause with no exception class or a

finally would also catch the

error.)

An advantage of the exception approach is that the try statement that catches it can be several

function calls back; also, you don’t have to check the value returned

by next each time. This is

particularly useful when one function calls another that calls

another, and so on. A one-argument call to next in the innermost function and a

try statement in the top-level

function will terminate the entire process and hand control back to

the top-level function, which catches StopIteration.

Raising Exceptions

Exception raising isn’t limited to library functions—your code can raise them too.

The raise statement

The raise statement

is used to raise an exception and initiate exception

handling.

The exception-expression can be any

expression whose value is either an exception class or an instance of

one. If it is an exception class, the statement simply creates an

instance for you. Creating your own instance allows you to specify

arguments to the new instance—typically a message providing more

detail about the condition encountered. The class Exception can

be used for whatever purposes you want, and it can take an arbitrary

number of arguments. You can put statements like this in your

code:

raise Exception('File does not appear to be in FASTA format.', filename)The statements in any of a try statement’s

exception clauses can “reraise” an exception using a raise statement with no expression. In that

case, the stack unwinding resumes and continues until the next

try statement prepared to handle

the exception is encountered.

Not only can your code raise exceptions, but you can create your own exception classes and raise instances of those. (The next chapter shows you how to create your own classes.) It’s especially important for people building modules for other people to use, since code in a module has no way of knowing what code from the outside wants to do when various kinds of problems are encountered. The only reasonable thing to do is design modules to define appropriate exception classes and document them for users of the module so they know what exceptions their code should be prepared to handle.

Raising an exception to end a loop

The point was made earlier that exceptions aren’t necessarily errors. You

can use a combination of try and

raise statements as an alternative

way of ending loops. You would do this if you had written a long

sequence of functions that call each other, expecting certain kinds of

values in return. When something fails deep down in a sequence of

calls it can be very awkward to return None or some other failure value back

through the series of callers, as each of them would have to test the

value(s) it got back to see whether it should continue or itself

return None. A common example is

repeatedly using str.find in many

different functions to work through a large string.

Using exception handling, you can write code without all that

distracting error reporting and checking. Exceptional situations can

be handled by raising an error. The first function called can have a

“while-true” loop inside a try

statement. Whenever some function determines that nothing remains to

process, it can throw an exception. A good exception class for this

purpose is StopIteration,

which is used in the implementation of generators,

while-as statements, and other mechanisms

we’ve seen:

try: while(True):begin complicated multi-function input processingexcept StopIteration: pass... many definitions of functions that call each other; ...... wherever one detects the end of input, it does: ...raise StopIteration

Extended Examples

This section presents some extended examples that make use of the constructs described earlier in the chapter.

Extracting Information from an HTML File

Our first example in this section is based on the technique just discussed of raising an exception to end the processing of some text. Consider how you would go about extracting information from a complex HTML page. For example, go to NCBI’s Entrez Gene site (http://www.ncbi.nlm.nih.gov/sites/entrez), enter a gene name in the search field, click the search button, and then save the page as an HTML file. Our example uses the gene vWF.[25] Example 4-29 shows a program for extracting some information from the results returned. The patterns it uses are very specific to results saved from Entrez Gene, but the program would be quite useful if you needed to process many such pages.

endresults = '- - - - - - - - end Results - - - - - -'

patterns = ('</em>]',

'\n',

'</a></div><div class="rprtMainSec"><div class="summary">',

)

def get_field(contents, pattern, endpos):

endpos = contents.rfind(pattern, 0, endpos)

if endpos < 0:

raise StopIteration

startpos = contents.rfind('>', 0, endpos)

return (endpos, contents[startpos+1:endpos])

def get_next(contents, endpos):

fields = []

for pattern in patterns:

endpos, field = get_field(contents, pattern, endpos)

fields.append(field)

fields.reverse()

return endpos, fields

def get_gene_info(contents):

lst = []

endpos = contents.rfind(endresults, 0, len(contents))

try:

while(True):

endpos, fields = get_next(contents, endpos)

lst.append(fields)

except StopIteration:

pass

lst.reverse()

return lst

def get_gene_info_from_file(filename):

with open(filename) as file:

contents = file.read()

return get_gene_info(contents)

def show_gene_info_from_file(filename):

infolst = get_gene_info_from_file(filename)

for info in infolst:

print(info[0], info[1], info[2], sep='\n ')

if __name__ == '__main__':

show_gene_info_from_file(sys.argv[1]

if len(sys.argv) > 1

else 'EntrezGeneResults.html')Output for the first page of the Entrez Gene results for vWF looks like this:

Vwf

Von Willebrand factor homolog

Mus musculus

VWF

von Willebrand factor

Homo sapiens

VWF

von Willebrand factor

Canis lupus familiaris

Vwf

Von Willebrand factor homolog

Rattus norvegicus

VWF

von Willebrand factor

Bos taurus

VWF

von Willebrand factor

Pan troglodytes

VWF

von Willebrand factor

Macaca mulatta

vwf

von Willebrand factor

Danio rerio

VWF

von Willebrand factor

Gallus gallus

VWF

von Willebrand factor

Sus scrofa

Vwf

lectin

Bombyx mori

VWF

von Willebrand factor

Oryctolagus cuniculus

VWF

von Willebrand factor

Felis catus

VWF

von Willebrand factor

Monodelphis domestica

VWFL2

von Willebrand Factor like 2

Ciona intestinalis

ADAMTS13

ADAM metallopeptidase with thrombospondin type 1 motif, 13

Homo sapiens

MADE_03506

Secreted protein, containing von Willebrand factor (vWF) type A domain

Alteromonas macleodii 'Deep ecotype'

NOR51B_705

putative secreted protein, containing von Willebrand factor (vWF) type A domain

gamma proteobacterium NOR51-B

BLD_1637

von Willebrand factor (vWF) domain containing protein

Bifidobacterium longum DJO10A

NOR53_416

secreted protein, containing von Willebrand factor (vWF) type A domain

gamma proteobacterium NOR5-3This code was developed in stages. The first version of the

program had separate functions get_symbol, get_name, and get_species. Once they were cleaned up and

working correctly it became obvious that they each did the same thing,

just with a different pattern. They were therefore replaced with a

single function that had an additional parameter for the search

pattern.

The original definition of get_next contained repetitious lines. This

definition replaces those with an iteration over a list of patterns.

These changes made the whole program easily extensible. To extract more

fields, we just have to add appropriate search patterns to the patterns list.

It should also be noted that because the second line of some

entries showed an “Official Symbol” and “Name” but others didn’t, it

turned out to be easier to search backward from the end of the file. The

first step is to find the line demarcating the end of the results. Then

the file contents are searched in reverse for each pattern in turn, from

the beginning of the file to where the last search left off. (Note that

although you might expect it to be the other way around, the arguments

to rfind are interpreted just like

the arguments to find, with the

second less than the third.)

The Grand Unified Bioinformatics File Parser

This section explores some ways the process of reading information from text files can be generalized.

Reading the sequences in a FASTA file

Example 4-30 presents

a set of functions for reading the sequences in a FASTA file. They are actually quite general, and can

work for a variety of the kinds of formats typically seen in

bioinformatics. The code is a lot like what we’ve seen in earlier

examples. All that is needed to make these functions work for a

specific file format is an appropriate definition of skip_intro and next_item.

def get_items_from_file(filename, testfn=None): """Return all the items in the file named filename; if testfn then include only those items for which testfn is true""" with open(filename) as file: return get_items(file, testfn) def find_item_in_file(filename, testfn=None): """Return the first item in the file named filename; if testfn then return the first item for which testfn is true""" with open(filename) as file: return find_item(file, testfn) def find_item(src, testfn): """Return the first item in src; if testfn then return the first item for which testfn is true""" gen = item_generator(src, testfn) item = next(gen) if not testfn: return item else: try: while not testfn(item): item = next(gen) return item except StopIteration: return None def get_items(src, testfn=None): """Return all the items in src; if testfn then include only those items for which testfn is true""" return [item for item in item_generator(src) if not testfn or testfn(item)] def item_generator(src): """Return a generator that produces a FASTA sequence from src each time it is called""" skip_intro(src) seq = '' description = src.readline().split('|') line = src.readline() while line: while line and line[0] != '>': seq += line line = src.readline() yield (description, seq) seq = '' description = line.split('|') line = src.readline() def skip_intro(src): """Skip introductory text that appears in src before the first item""" pass # no introduction in a FASTA file

The functions get_items_from_file and find_item_in_file simply take a filename and

call get_items and find_item, respectively. If you already have

an open file, you can pass it directly to get_items or find_item. All four functions take an

optional filter function. If one is provided, only items for which the

function returns true are included. Typically, a filter function like

this would be a lambda expression. Note that find_item can be called repeatedly on the

same open file, returning the next item for which testfn is true, because after the first one

is found the rest of the source is still available for reading.

next_item is a generator

version of the functions we’ve seen for reading FASTA entries. It

reads one entry each time it is called, returning the split

description line and the sequence as a pair. This function and

possibly skip_intro would need to

be defined differently for different file formats. The other four

functions stay the same.

Generalized parsing

Extracting a structured representation from a text file is known as parsing. Python, for example, parses text typed at the interpreter prompt or imported from a module in order to convert it into an executable representation according to the language’s rules. Much of bioinformatics programming involves parsing files in a wide variety of formats. Despite the ways that formats differ, programs to parse them have a substantial underlying similarity, as reflected in the following template.

Parsing GenBank Files

Next, we’ll look at an example of applying the generalized parser template to read features and sequences from GenBank flat files.[26] There are many ways to navigate in a browser to get a page in GenBank format from the NCBI website.[27] For instance, if you know the GenInfo Identifier (GI), you can get to the corresponding GenBank record using the URL http://www.ncbi.nlm.nih.gov/nuccore/ followed by the GI number. Then, to download the page as a flat text file, simply click on the “Download” drop-down on the right side of the page just above the name of the sequence and select “GenBank” as the format. The file will be downloaded as sequence.gb to your browser’s default download directory.

There’s a great deal of information in these GenBank entries. For

this example we just want to extract the accession code, GI number,

feature information, and sequence. Example 4-31 shows the code needed

to implement the format-specific part of the unified parser template:

skip_intro and next_item. For a given format, the

implementation of either of these two functions may require other

supporting functions.